如何写学术论文的rebuttal?

请教一下如何写学术论文(会议论文,以CVPR等为例)的rebuttal?特别是reviewer说你的方法很ad hoc ? 有成功过的人士可以指点一下吗?

电光幻影炼金术(香港中文大学 CS PhD在读)回答:

rebuttal的目的:首要的目的是说服AC,其次目的是说服中间的审稿人,保住正面审稿人,再次的目的是说服负面审稿人。

对于分数不太理想的同学,rebuttal是通往下次投稿的土壤。我的节目叫从审稿到中稿,一个核心的观点就是中稿的过程是很平滑的,可以逐次提升的。暂时意见不好不要灰心。

对于新手而言,不要怕坚持投稿/recycle

明确以下是核心问题:Novelty,能否超过baseline,政策问题(抄袭,重投),实验对错的问题。总而言之就是一个硬指标:能否推动前人的研究成果。

明确一些是次要问题:部分超参数,比较的一些小模块,一些怀疑。

2. 态度和口吻

不要起状态,展现出自己是合理和理性的人。

不要质疑审稿人的能力。

如果说审稿人不理解/文章有不清楚的地方,解释就好了。

即便很傻,问到也要回答。

不要总是道歉。但是也是可以道歉的:概念性的问题/写作的问题。AC往往是比较重视概念的。

不要过分倾向于积极的审稿人。不要把积极的审稿人和消极的审稿人对立起来。直接针对最客观最本质的问题回答。不要耍政治斗争/狼人杀的小聪明。

经常出现的情况,积极的审稿人说写作很好,消极的审稿人说写作根本看不懂。一定是有则改之,无则加勉。避免抨击审稿人。比如说审稿人犯了事实性的错误,那可能会完全失去这个审稿人。可以直接把事实讲出来。

避免说审稿人说错了之类的,直接把正确的东西说出来就对了。

尽量多引用一些文章,引经据典。

3、关于写作

要有一个清晰的结构,很容易定位信息。

短小精悍。

直面问题,不要指代。反面例子:审稿人问:novelty是什么?作者回答:novelty在方法部分。

正确的回答:就直接说novelty是XX模块,别人没用过。或者说novelty是提出并解决了XX问题,别人没做过。

反面例子:审稿人问:实验为什么这么设置?作者回答:看Sec3.5。

正确的回答:把来龙去脉用两三句话讲一下,我这么设置是因为别人的文章也这么做了。或者说在验证集中比较好的参数。

了解审稿人的隐含义。比如说上面这个例子,审稿人的隐含义:别人的参数都不这么设置的。

请人帮忙读一下/改一下rebuttal。

Grammarly。一般来讲grammarly改完之后比人要好。

要把负面的东西正面化。

共性的问题可以提炼出来回答。

不要说final version,要说revised version。

不要说we acknolwledge you / we inform you,要说we agree

尽量回答所有的问题,至少要清晰的回答主要的问题,并且不违背其他原则。

审稿人的标号要清晰。

老问题:是按照审稿人组织还是按照问题组织。我的经验是先回答重要的共性的问题,然后按照审稿人组织。

不要有太多的结论和前缀。

把容易胜利的点和重要的点放在前面。

写作完后最后过一遍。

保证每句话都是self-contained的。

4. 关于实验这块

rebuttal想翻盘全靠实验,原地干拔(指干吵架,空口解释)根本行不通。

补充的实验不理想怎么办?不理想不怕,看对你文章是不是致命打击。

如果审稿人提的实验已经有,可以指出,但是要有概括。不能说你看XX地方去,要把设计实验->发现-->排除其他原因>结论的逻辑捋清楚。

5. 常见的坑点

不要说看起来容易做起来难。

不要发牢骚。

尽量不要举报审稿人 (ac letter)。(1)AC本身就是负面的审稿人。(2)负面审稿人是AC邀请的。除非很明显的问题:deep learning is just some tricks/ is not good as some arxiv paper.

避免想象,要有事实依据。比如说我的文章十年之后会有影响力/我的文章之后会解决这个问题。Don't do science fiction。

不要作不合理的推测。比如审稿人是瞧不起中国人/瞧不起做这个领域的人/这个审稿人之前审过我的文章。

6. 外部资源-模板-示范

http://www.siggraph.org/s2009/submissions/technical_papers/faqs.php#rebuttal

Rebuttal 模版

We sincerely appreciate the constructive suggestions from reviewers.

General response: First, new experiments on Atari found that our methods can improve both A3C and TreeQN on 37 (32 added) out of 50 (44 added) games while achieving comparable results on other games. Second, our main contribution is to show that one can abstract routines from a single demonstration to help policy learning. The major difference between LfD approaches and ours is typical LfD methods do not learn reusable and interpretable routines. Third, the most similar work in the LfD domain is ComPILE (Kipf et al. 2019), but we significantly outperform them in data efficiency. Furthermore, we added experiments to generalize our approach to the continuous-action domain.

1. Experiments on Atari games (R2, R4, R5)

We tested on 44 more Atari games using the setting in Sec 4.2 and outperformed the baselines. The relative percentage improvement brought by routines for TreeQN / A3C is (calculated by (our score - baseline score) / baseline score * 100):

Ami:13/21, Ass:8/18, Asterix:12/-3, Asteroids:8/2, Atl:-1/18, Ban:4/-3, Bat:10/-1, Bea:23/7, Bow:3/0, Box:-4/19, Bre:16/6, Cen:-9/10, Cho:13/17, Dem:19/7, Dou:20/0, Fish:0/5, Fre:8/0, Fro:9/17, Gop:11/5, Gra:2/4, Hero:11/7, Ice:-1/0, James:21/15, Kan:18/-7, Kung-Fu:10/26, Monte:3/2, Name:17/13, Pitfall:-4/-11, Pong:4/9, Private:19/15, River:21/30, Road:15/19, Robot:1/0, Seaquest:-9/-3, Space:24/19, Star:29/19, Ten:0/-1, Time:23/9, Tutan:18/6, UpN:7/16, Vent:9/17, Video:23/26, Wizard:13/9, Zax:-1/-7.

2. Baselines of LfDs (R2, R5)

We included an LfD baseline, behavior cloning, in Sec 4.1.2. We further compared ours to ComPILE based on their open-source implementation. We trained a ComPILE model on one single demonstrationon Atari and tested under the Hierarchical RL setting (other details are the same as their paper’s Sec 4.3). We found that their approach deterioratesthe A3C baseline on 50 Atari games from 5% to 34% (13% on average). We speculate that this is because their model relieson a VAE to regenerate the demonstration, and the model over-fits to the few states appeared in the available demonstration. Even on the simple grid-world cases presented in their paper, they need 1024 instances of demonstrationsto train the VAE, which reveals the data inefficiency of their approach.

3. Continuous-action environments (R4)

We have extended our methods to high-dimensional continuous-action cases. Specifically, we conducted experiments on the challenging car-racing environment TORCS. We define routines as components in the programmatic policy (refer to PROPEL from Verma et al. 2019). In the routine proposal phase, we do not use Sequitur but use an off-the-shelf program synthesis introduced by Verma et al. 2018. We also evaluate routines by frequency and length. Note that too similar routines would be counted as the same when calculating frequency. During routine usage, those abstracted routines can not only accelerate search but also improve Bayesian optimization. On the most challenging track AALBORG, by combining our proposed routine learning policy, our car could run 16% faster than the SOTA PROPELPROG (from 147 sec/lap to 124 sec/lap) and reduce the crashing ratio from 0.12 to 0.10.

4. Size of action space vs. training speed and performance (R2, R4)

By controlling the number of selected routines (~3 routines), training could still be accelerated, not slowed. We have demonstrated this in Supplementary Sec 3.1.3.

5. CrazyClimber for A3C (R2, R4)

In practice, one may use small budget training (e.g., train PPO for 1M steps) to decide which routines to add to the action space. We found that this technique can help improve the A3C baseline for 5% on CrazyClimber.

6. Prior knowledge on when to use routines (R2)

We identified all routines from the demonstration and added a loss to map the corresponding state to routine (as used in DQfD). In Qbert, Krull, and CrazyClimber, the relative percentage improvement of this is -6, 10, -4. We will add a paragraph to illustrate this, and we are looking forward to better ways to learn when to use routines.

7. Show usage of routine scores (R5)

We have included examples of routine abstraction in Supplementary Table 1. We would specify scoring examples in routine abstraction in the revision.

8. Baseline PbE (R4)

Although the PbE does not directly fetch proposals from demonstrations, it leverages the demonstration to evaluate routines and choose the best ones. We will provide detailed routine scores to illustrate this.

9. Figure/text suggestions (R4)

Thanks. We will revise the teaser figure, texts, and captions accordingly.

涂存超(清华大学 计算机科学PhD)回答:

作为一名高年级博士生,会议rebuttal的经历颇为丰富,也在这个过程中总结出了些许经验,供各位参考。

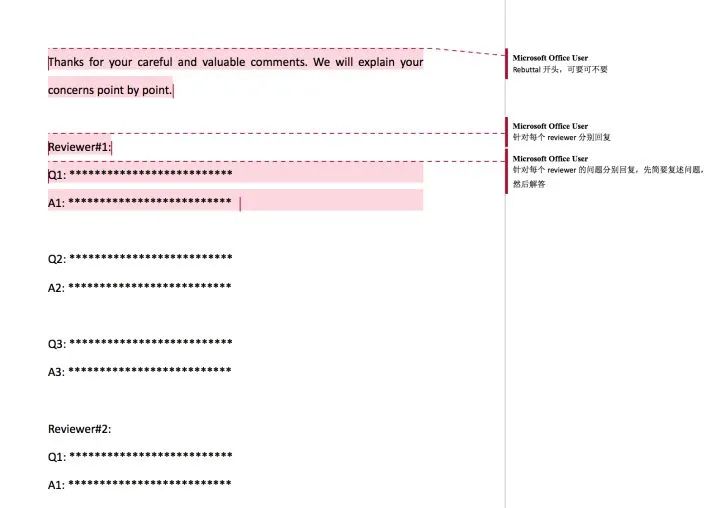

1. Rebuttal的基本格式

一般rebuttal都有比较严格的篇幅要求,比如不能多于500或600个词。所以rebuttal的关键是要在有限的篇幅内尽可能清晰全面的回应数个reviewer的关注问题,做到释义清楚且废话少说。目前我的rebuttal的格式一般如下所示:

其中,不同reviewer提出的同样的问题可以不用重复回答,可以直接"Please refer to A2 to reviewer#1"。结构清晰的rebuttal能够对reviewer和area chair提供极大的便利,也便于理解。

2. Rebuttal的内容

Rebuttal一定要着重关注reviewer提出的重点问题,这些才是决定reviewer的态度的关键,不要尝试去回避这种问题。回答这些问题的时候要直接且不卑不亢,保持尊敬的同时也要敢于指出reviewer理解上的问题。根据我的审稿经验,那些明显在回避一些问题的response只会印证自己的负面想法;而能够直面reviewer问题,有理有据指出reviewer理解上的偏差的response则会起到正面的效果。(PS: 如果自己的工作确实存在reviewer提出的一些问题,不妨表示一下赞同,并把针对这个问题的改进列为future work)

面对由于reviewer理解偏差造成全部reject的情况,言辞激烈一点才有可能引起Area Chair的注意,有最后一丝机会,当然,最基本的礼貌还是要有,不过很有可能有负面的效果,参考今年ICLR LipNet论文rebuttal https://openreview.net/forum?id=BkjLkSqxg。

3. Rebuttal的意义

大家都知道通过rebuttal使reviewer改分的概率很低,但我认为rebuttal是一个尽人事的过程,身边也确实有一些从reject或borderline通过rebuttal最终被录用的例子。尤其像AAAI/IJCAI这种AI大领域的会议,最近两年投稿动则三四千篇,这么多reviewer恰好是自己小领域同行的概率很低,难免会对工作造成一些理解上的偏差甚至错误,此时的rebuttal就显得特别重要。所以对于处于borderline或者由于错误理解造成低分的论文,一定!一定!一定!要写好rebuttal!

最后贴一下LeCun在CVPR2012发给pc的一封withdrawal rebuttal镇楼(该rebuttal被pc做了匿名处理),据说促成了ICLR的诞生,希望自己以后也有写这种rebuttal的底气:)

Hi Serge,

We decided to withdraw our paper #[ID no.] from CVPR "[Paper Title]" by [Author Name] et al.

We posted it on ArXiv: http://arxiv.org/ [Paper ID] .

We are withdrawing it for three reasons: 1) the scores are so low, and the reviews so ridiculous, that I don't know how to begin writing a rebuttal without insulting the reviewers; 2) we prefer to submit the paper to ICML where it might be better received; 3) with all the fuss I made, leaving the paper in would have looked like I might have tried to bully the program committee into giving it special treatment.

Getting papers about feature learning accepted at vision conference has always been a struggle, and I've had more than my share of bad reviews over the years. Thankfully, quite a few of my papers were rescued by area chairs.

This time though, the reviewers were particularly clueless, or negatively biased, or both. I was very sure that this paper was going to get good reviews because: 1) it has two simple and generally applicable ideas for segmentation ("purity tree" and "optimal cover"); 2) it uses no hand-crafted features (it's all learned all the way through. Incredibly, this was seen as a negative point by the reviewers!); 3) it beats all published results on 3 standard datasetsfor scene parsing; 4) it's an order of magnitude faster than the competing methods.

If that is not enough to get good reviews, I just don't know what is.

So, I'm giving up on submitting to computer vision conferences altogether. CV reviewers are just too likely to be clueless or hostile towards our brand of methods. Submitting our papers is just a waste of everyone's time (and incredibly demoralizingto my lab members)

I might come back in a few years, if at least two things change:

- Enough people in CV become interested in feature learning that the probability of getting a non-clueless and non-hostile reviewer is more than 50% (hopefully [Computer Vision Researcher]'s tutorial on the topic at CVPR will have some positive effect).

- CV conference proceedings become open access.

We intent to resubmit the paper to ICML, where we hope that it will fall in the hands of more informed and less negatively biased reviewers (not that ML reviewers are generally more informed or less biased, but they are just more informed about our kind of stuff). Regardless, I actually have a keynote talk at [Machine Learning Conference], where I'll be talking about the results in this paper.

Be assured that I am not blaming any of this on you as the CVPR program chair. I know you are doing your best within the traditional framework of CVPR.

I may also submit again to CV conferences if the reviewing process is fundamentally reformed so that papers are published before they get reviewed.

You are welcome to forward this message to whoever you want.

I hope to see you at NIPS or ICML.

Cheers,

-- [Author]

熊风(香港科技大学 计算机)回答:

前面的各个回答已经说得很好了。我结合自己这次ICCV (2017)的经历补充一下看法。

之前看到结果是borderline, weak reject, borderline的时候, 心都凉了。觉得这次肯定没戏了,一个accept也没有。自己当时心态上都有点破罐子破摔,rebuttal写的比较随便。但是@啥都不会

学长还是很认真地一字一句帮我改,指导我应该补充哪些地方,强调哪些地方。最后rebuttal果然奏效,paper中了。而且reviewer对我们的rebuttal给了好评: “The authors provided a sufficient rebuttal to solve most of my concerns.” “Two of the three reviewers recommend accept as a poster and agree that the rebuttal addresses their concerns.”

我觉得首先应该要认清reviewer的思路。

其实reviewer想拒你的理由,也不一定就是他给出的那些comments. 有时候可能就是做的东西不符合他的口味,就是想拒,但又找不出明显的硬伤,然后就挑一堆有的没的问题。让这种reviewer改意见感觉挺难的,他心目中对你论文的看法早就定了。

我这次投的ICCV,给weak reject的那个reviewer就是这种情况。提的一些问题根本不在点上,基本没有评论我的方法本身,净挑一些dataset, evaluation metrics的问题,尽管我们用的dataset, evaluation metrics是大家普遍都在用的。这个reviewer到最后也没修改意见,还是给了weak reject.

但其他两个给borderline的reviewer,提的一些问题就靠谱了很多。有的确实是我们论文的问题。而且从一些话里也可以感受到他们的态度也是摇摆不定的,比如“some issues in the paper should be fixed before being fully accepted”. 遇到这种情况,就要果断争取。我们rebuttal主要就是尽可能地把这两个reviewer的concerns解释清楚。最后这两个reviewer也修改了意见。

如果是论文没写好,比如遗漏了一些关键细节。大方承认,并且在rebuttal里面把这些细节展现出来,承诺在论文之后的版本会加上。

如果是reviewer对你的论文理解有问题,那就在rebuttal里面更加详细地解释。

如果确实指出了自己模型/实验的一些瑕疵,可以强调自己论文的亮点和contribution. 比如有个reviewer说"the proposed method cannot beat other state of the arts", 我们可以强调我们并没有像其他论文那样用ensemble的方法来提升performance,我们的focus是XXX. 比起performance的一些细微提升,XXX更重要。

上面就是自己的一些经验,分享给大家看看就好。可能也不一定正确,可能就是这次rebuttal我们运气不错而已。

最后祝大家都好运。

文章转载自知乎,著作权归属原作者

——The End——

分享

收藏

点赞

在看