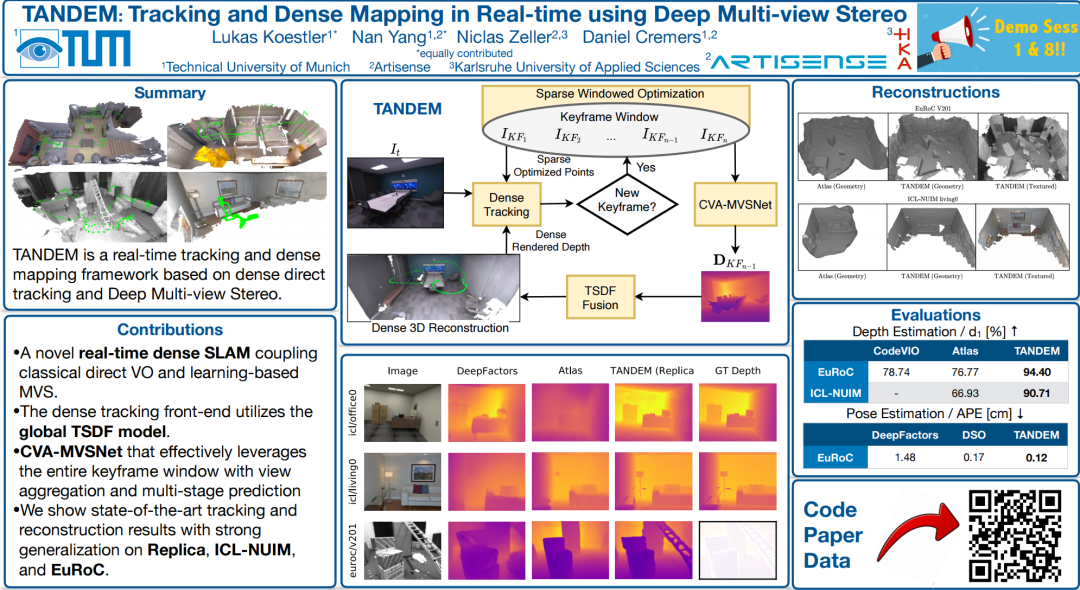

Project page: https://vision.in.tum.de/research/vslam/tandem

Paper: https://arxiv.org/pdf/2111.07418.pdf

Code: https://github.com/tum-vision/tandem

Project page: https://vision.in.tum.de/research/monorec

Paper: https://arxiv.org/pdf/2011.11814.pdf

Code: https://github.com/Brummi/MonoRec

Project page: https://www.ipb.uni-bonn.de/research/, https://www.ipb.uni-bonn.de/data-software/

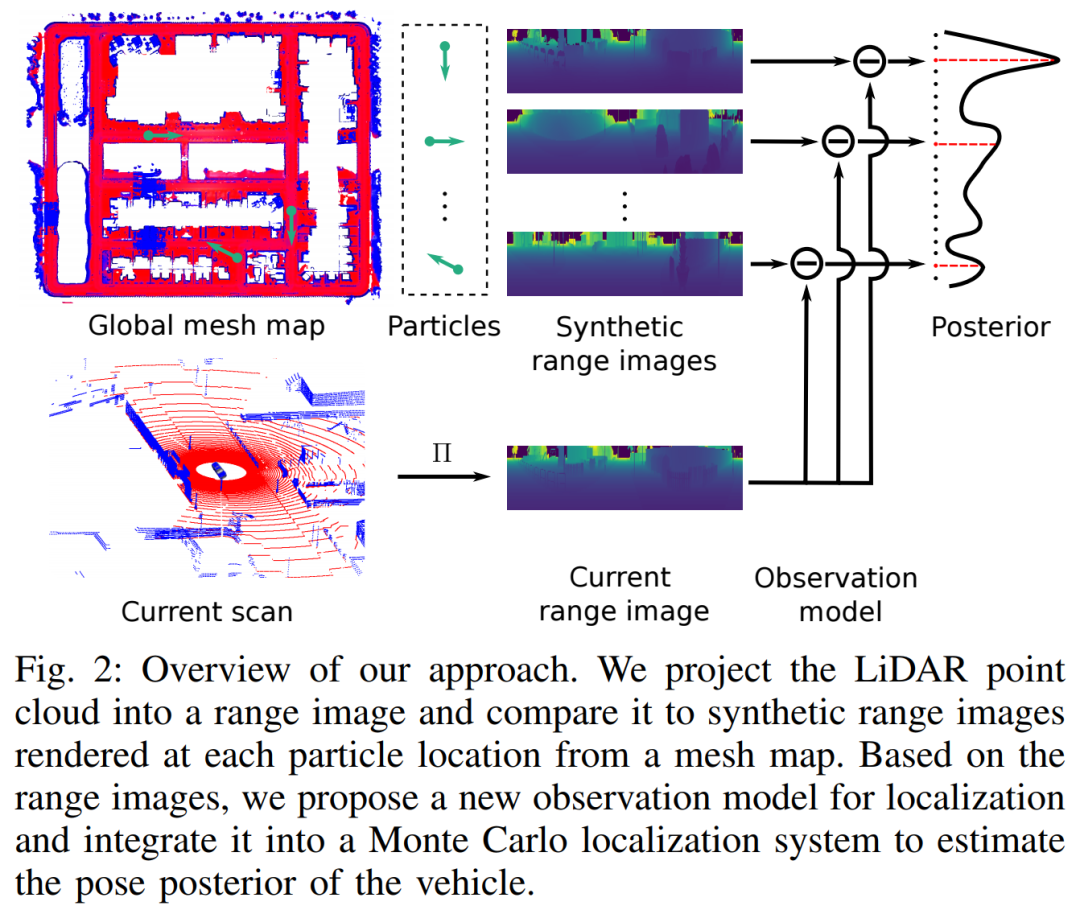

Paper: https://arxiv.org/pdf/2105.12121.pdf

Code: https://github.com/PRBonn/range-mcl

Project page: https://baug.ethz.ch/en/, https://www.hesaitech.com/zh/, https://sti.epfl.ch

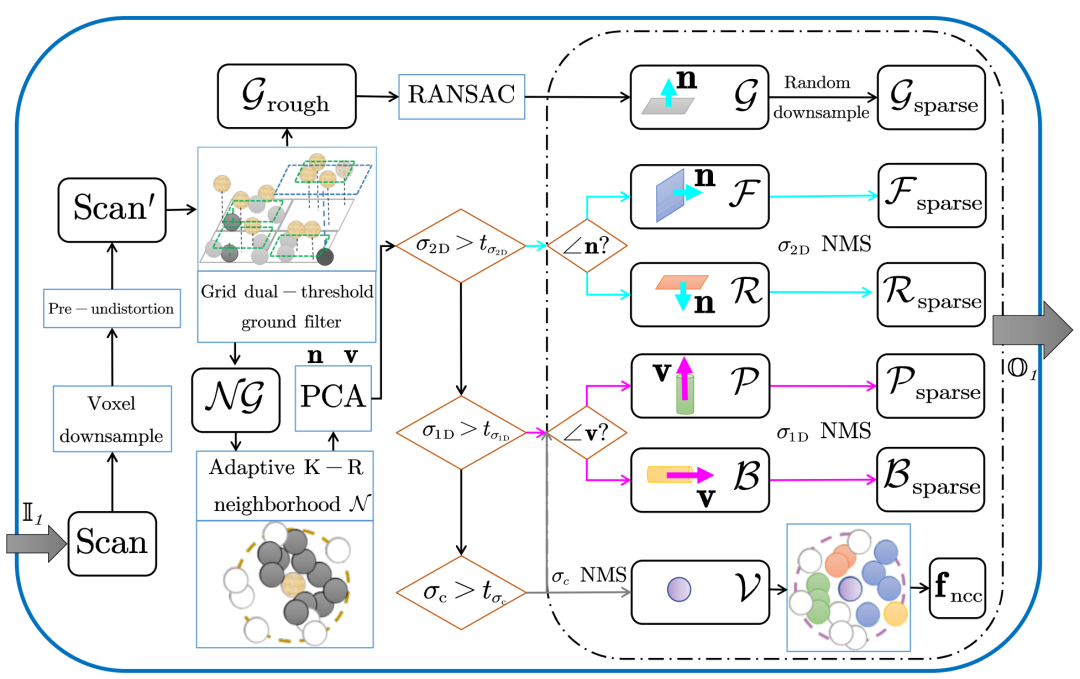

Paper: https://arxiv.org/pdf/2102.03771.pdf

Code: https://github.com/YuePanEdward/MULLS

Project page: https://isas.iar.kit.edu/

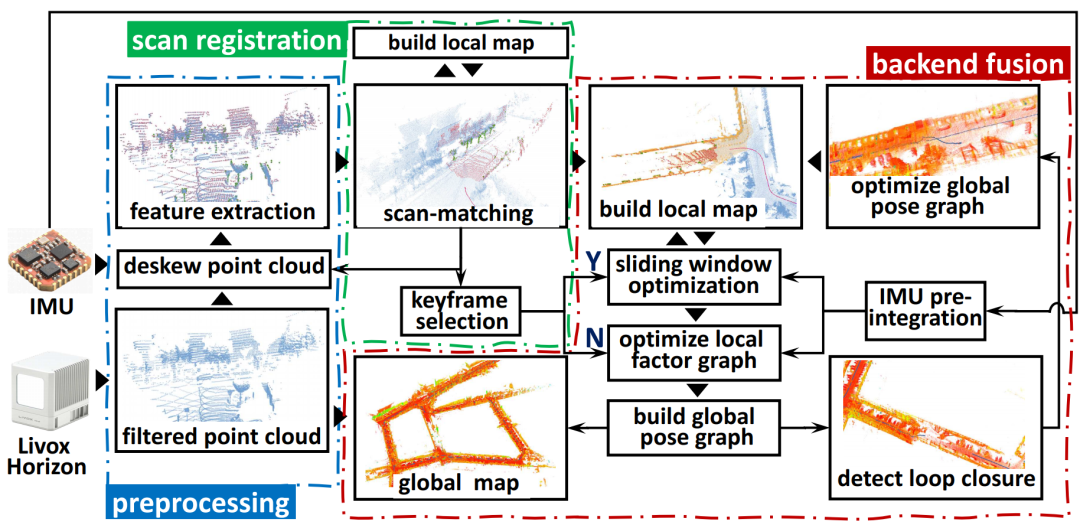

Paper: https://arxiv.org/pdf/2010.13150v3

Code: https://github.com/KIT-ISAS/lili-om

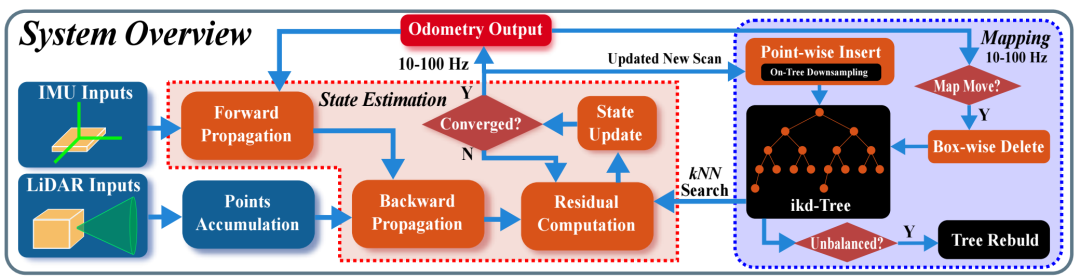

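Fu Zhang's group at the University of Hong Kong builds FAST-LIO2 on top of FAST-LIO (a computationally efficient, robust LiDAR-inertial odometry package that fuses LiDAR feature points with IMU data through a tightly coupled, fast iterated EKF). FAST-LIO2 uses ikd-Tree (https://github.com/hku-mars/ikd-Tree) for incremental mapping and computes odometry by registering raw LiDAR points directly rather than extracted features. It supports external IMUs and runs on ARM platforms.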
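The "iterated" part of the EKF means the measurement model is re-linearized around the latest estimate and the update is repeated until it converges, instead of linearizing once at the prior. FAST-LIO's actual filter is an on-manifold iterated error-state Kalman filter driven by LiDAR residuals; the sketch below shows only the scalar, Euclidean version of the iterated update. The function name `iekf_update` and the toy measurement model h(x) = x² are hypothetical choices for illustration, not FAST-LIO code.

```python
from typing import Callable, Tuple


def iekf_update(
    x_prior: float,
    P: float,
    z: float,
    R: float,
    h: Callable[[float], float],
    dh: Callable[[float], float],
    max_iters: int = 20,
    tol: float = 1e-10,
) -> Tuple[float, float]:
    """Scalar iterated EKF measurement update (illustrative sketch only).

    x_prior, P: prior mean and variance
    z, R:       measurement and its noise variance
    h, dh:      measurement model and its derivative (hypothetical toy inputs)
    """
    x = x_prior
    for _ in range(max_iters):
        H = dh(x)                       # re-linearize at the current iterate
        K = P * H / (H * P * H + R)     # Kalman gain for this linearization
        # Anchor the correction at the prior; the H * (x_prior - x) term
        # compensates for linearizing away from the prior mean.
        x_new = x_prior + K * (z - h(x) - H * (x_prior - x))
        converged = abs(x_new - x) < tol
        x = x_new
        if converged:
            break
    # Posterior variance uses the gain at the final linearization point.
    H = dh(x)
    K = P * H / (H * P * H + R)
    return x, (1.0 - K * H) * P


if __name__ == "__main__":
    # Toy problem: prior x = 1, precise measurement z = x^2 = 4.
    x, var = iekf_update(1.0, 1.0, 4.0, 1e-12, lambda s: s * s, lambda s: 2 * s)
    print(x, var)
```

With `max_iters=1` the state estimate is a single-linearization EKF step, which on this toy problem stops near 2.5; iterating converges to about 2.0. That gap is the kind of linearization error the iterated update is meant to reduce.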

Project page: https://mars.hku.hk

Paper: https://arxiv.org/pdf/2107.06829v1.pdf

Code: https://github.com/hku-mars/FAST_LIO

Related work:

ikd-Tree: A state-of-the-art dynamic KD-Tree for 3D kNN search. https://github.com/hku-mars/ikd-Tree

IKFoM: A toolbox for fast, high-precision on-manifold Kalman filtering. https://github.com/hku-mars/IKFoM

UAV Avoiding Dynamic Obstacles: One of the implementations of FAST-LIO in robot planning. https://github.com/hku-mars/dyn_small_obs_avoidance

R2LIVE: A high-precision LiDAR-inertial-visual fusion system that uses FAST-LIO as its LiDAR-inertial front-end. https://github.com/hku-mars/r2live

UGV Demo: Model Predictive Control for Trajectory Tracking on Differentiable Manifolds. https://www.youtube.com/watch?v=wikgrQbE6Cs

FAST-LIO-SLAM: The integration of FAST-LIO with the Scan-Context loop-closure module. https://github.com/gisbi-kim/FAST_LIO_SLAM

FAST-LIO-LOCALIZATION: The integration of FAST-LIO with a re-localization module. https://github.com/HViktorTsoi/FAST_LIO_LOCALIZATION
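What ikd-Tree provides to FAST-LIO2 is fast 3D k-nearest-neighbor (kNN) search over the map. As a rough sketch of just that query, here is a static, median-split KD-tree with kNN search in plain Python. It is not ikd-Tree: per its description, ikd-Tree is a dynamic tree that also handles incremental updates. The names `Node`, `build_kdtree` and `knn` are hypothetical.

```python
import heapq
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


class Node:
    """One KD-tree node: a splitting point and the axis (0=x, 1=y, 2=z) it splits on."""

    def __init__(self, point: Point, axis: int,
                 left: Optional["Node"], right: Optional["Node"]) -> None:
        self.point = point
        self.axis = axis
        self.left = left
        self.right = right


def build_kdtree(points: Sequence[Point], depth: int = 0) -> Optional[Node]:
    """Build a balanced static KD-tree by splitting at the median, cycling x, y, z."""
    if not points:
        return None
    axis = depth % 3
    pts = sorted(points, key=lambda p: p[axis])
    mid = len(pts) // 2
    return Node(pts[mid], axis,
                build_kdtree(pts[:mid], depth + 1),
                build_kdtree(pts[mid + 1:], depth + 1))


def knn(root: Optional[Node], query: Point, k: int) -> List[Point]:
    """Return up to k points nearest to query (Euclidean), closest first."""
    if k <= 0:
        return []
    heap: List[Tuple[float, Point]] = []  # max-heap via (-squared_distance, point)

    def visit(node: Optional[Node]) -> None:
        if node is None:
            return
        d2 = sum((a - b) ** 2 for a, b in zip(node.point, query))
        if len(heap) < k:
            heapq.heappush(heap, (-d2, node.point))
        elif d2 < -heap[0][0]:
            heapq.heapreplace(heap, (-d2, node.point))  # evict the current worst
        diff = query[node.axis] - node.point[node.axis]
        near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
        visit(near)
        # The far side can only contain a closer point if the splitting plane
        # is nearer than the worst point kept so far.
        if len(heap) < k or diff * diff < -heap[0][0]:
            visit(far)

    visit(root)
    # Sorting (-d2, point) descending puts the smallest distance first.
    return [p for _, p in sorted(heap, reverse=True)]
```

A static tree like this must be rebuilt whenever points are added or removed. Avoiding that full rebuild as the map grows is the motivation for a dynamic structure such as ikd-Tree.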

Project page: https://mars.hku.hk/

Paper: https://arxiv.org/pdf/2109.07982.pdf

Code: https://github.com/hku-mars/r3live

Related work:

FAST-LIO: A computationally efficient and robust LiDAR-inertial odometry package. https://github.com/hku-mars/FAST_LIO

ikd-Tree: A state-of-the-art dynamic KD-Tree for 3D kNN search. https://github.com/hku-mars/ikd-Tree

LOAM-Livox: A robust LiDAR Odometry and Mapping (LOAM) package for Livox-LiDAR. https://github.com/hku-mars/loam_livox

openMVS: A library for computer-vision scientists and especially targeted to the Multi-View Stereo reconstruction community. https://github.com/cdcseacave/openMVS

VCGlib: An open source, portable, header-only Visualization and Computer Graphics Library. https://github.com/cnr-isti-vclab/vcglib

CGAL: A C++ Computational Geometry Algorithms Library. https://www.cgal.org/, https://github.com/CGAL/cgal

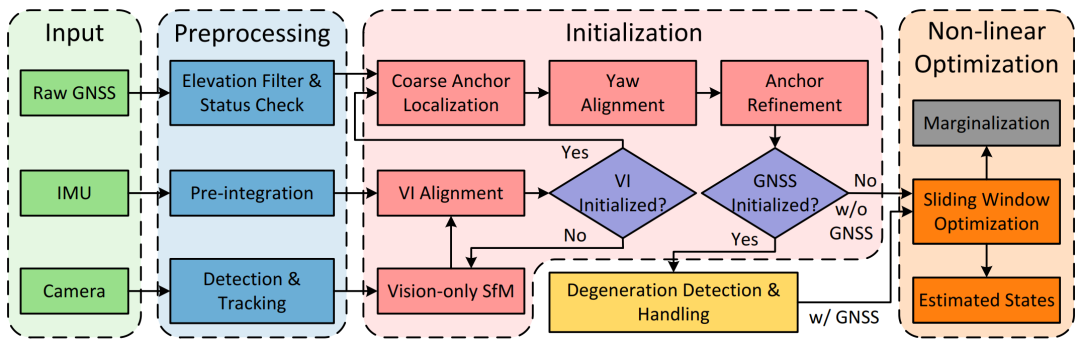

Project page: https://uav.hkust.edu.hk

Paper: https://arxiv.org/pdf/2103.07899.pdf

Code: https://github.com/HKUST-Aerial-Robotics/GVINS

Related resources: http://www.rtklib.com/. The system framework and the VIO part are based on VINS-Mono, camera modeling uses camodocal (https://github.com/hengli/camodocal), and optimization is done with Ceres (http://ceres-solver.org/).

RTKLIB: An Open Source Program Package for GNSS Positioning

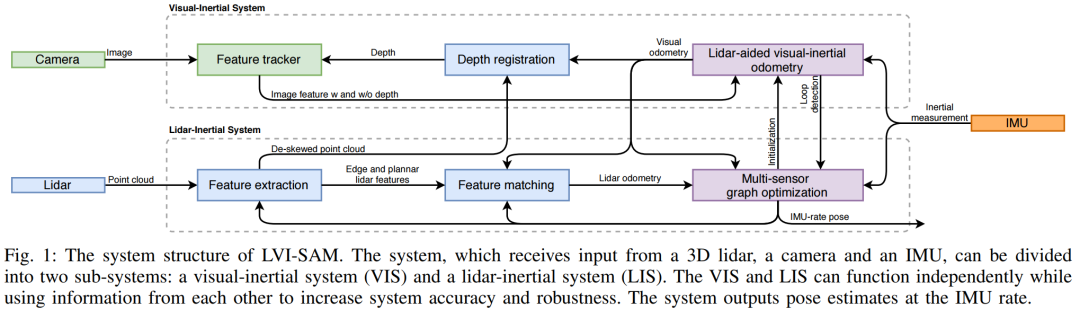

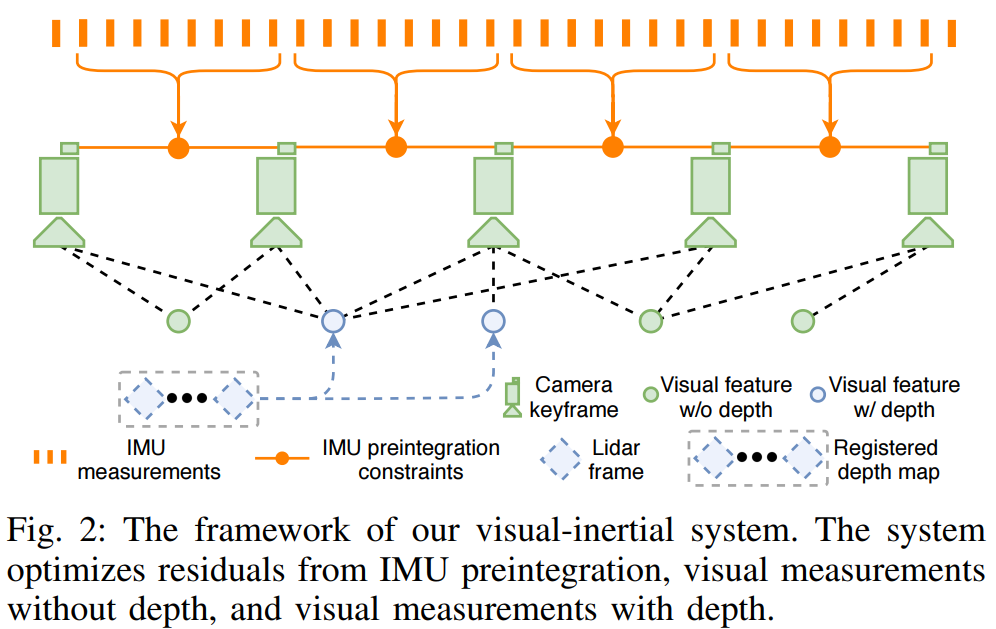

Project page: https://git.io/lvi-sam, https://dusp.mit.edu/, https://senseable.mit.edu/, https://www.ams-institute.org/

Paper: https://arxiv.org/pdf/2104.10831.pdf

Code: https://github.com/TixiaoShan/LVI-SAM

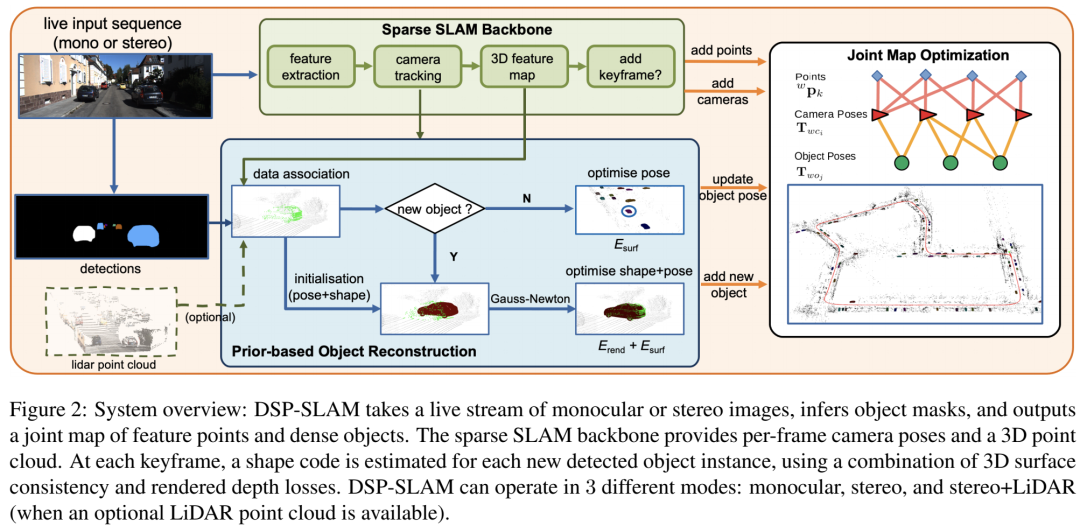

Project page: https://jingwenwang95.github.io/dsp-slam

Paper: https://arxiv.org/pdf/2108.09481v2.pdf

Code: https://github.com/JingwenWang95/DSP-SLAM

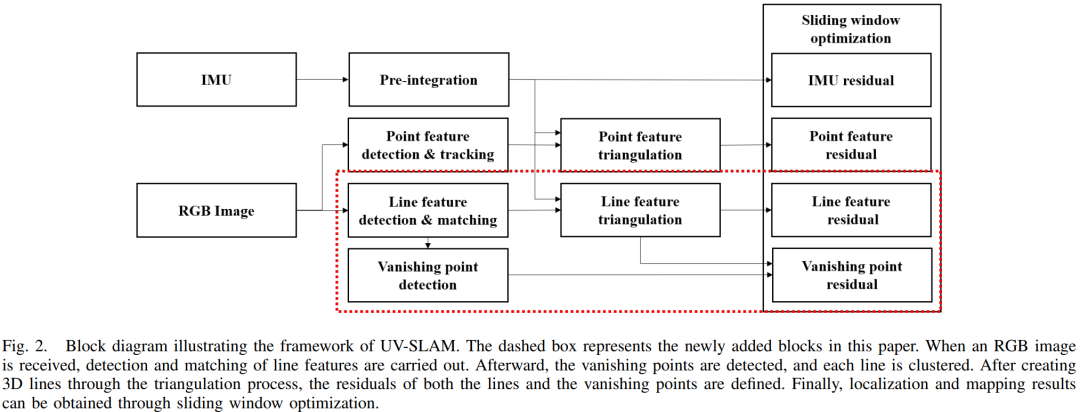

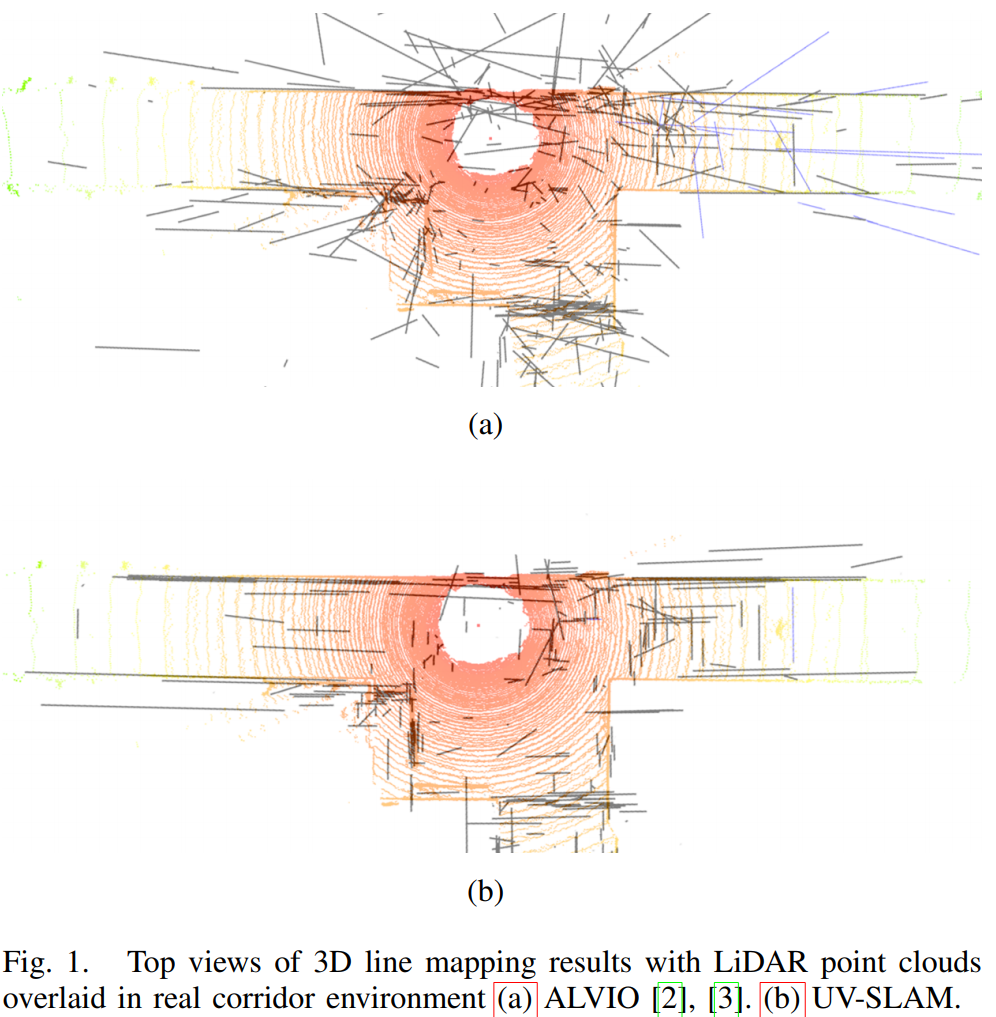

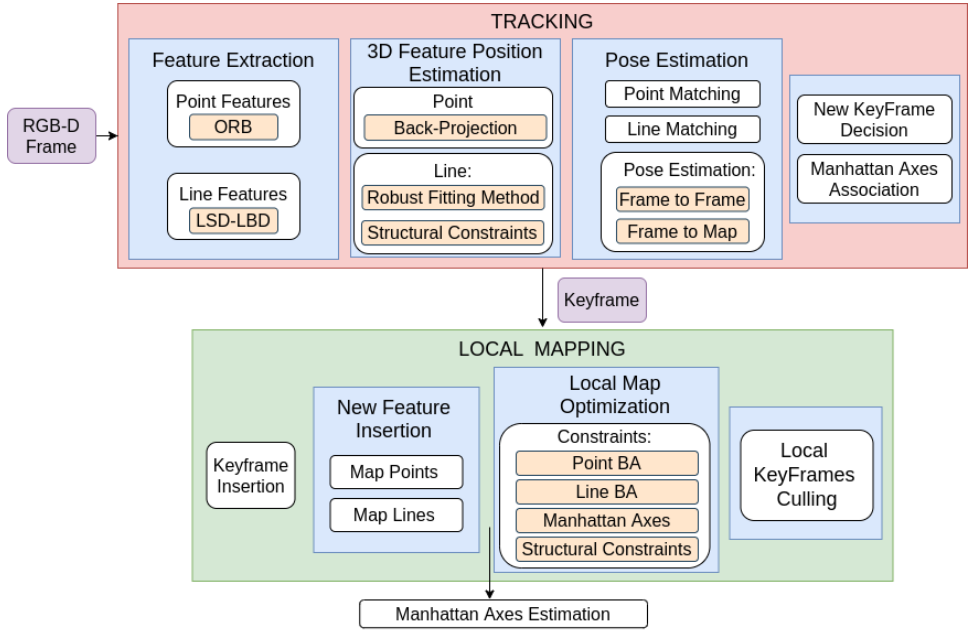

Paper: https://arxiv.org/pdf/2112.13515.pdf

Code: https://github.com/url-kaist/UV-SLAM (code to be released soon)

Related research: Avoiding Degeneracy for Monocular Visual SLAM with Point and Line Features

ALVIO: Adaptive Line and Point Feature-Based Visual Inertial Odometry for Robust Localization in Indoor Environments (code not yet released): https://github.com/ankh88324/ALVIO

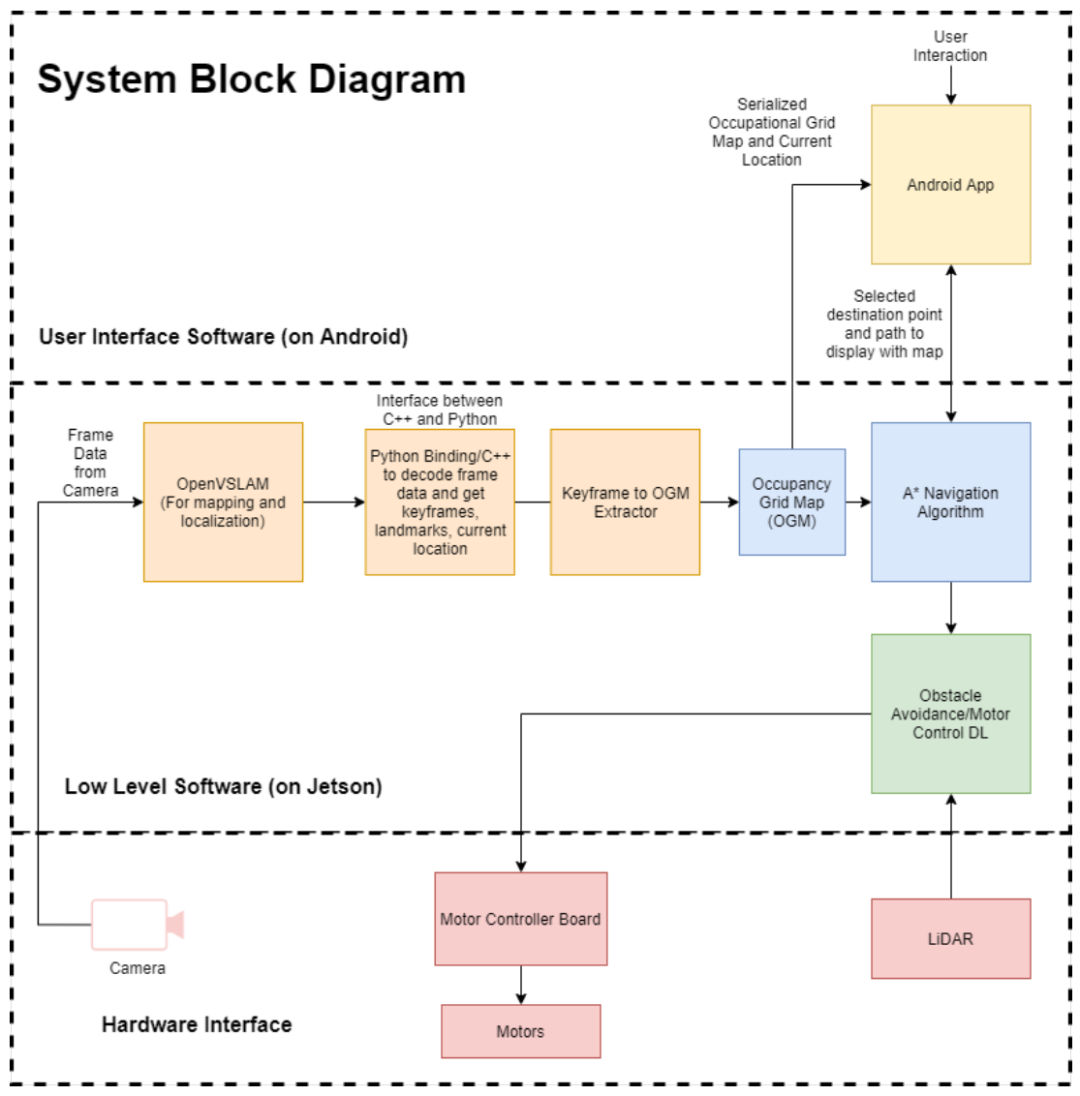

Project page: none

Paper: https://arxiv.org/pdf/2112.07723.pdf

Code: https://github.com/michealcarac/VSLAM-Mapping

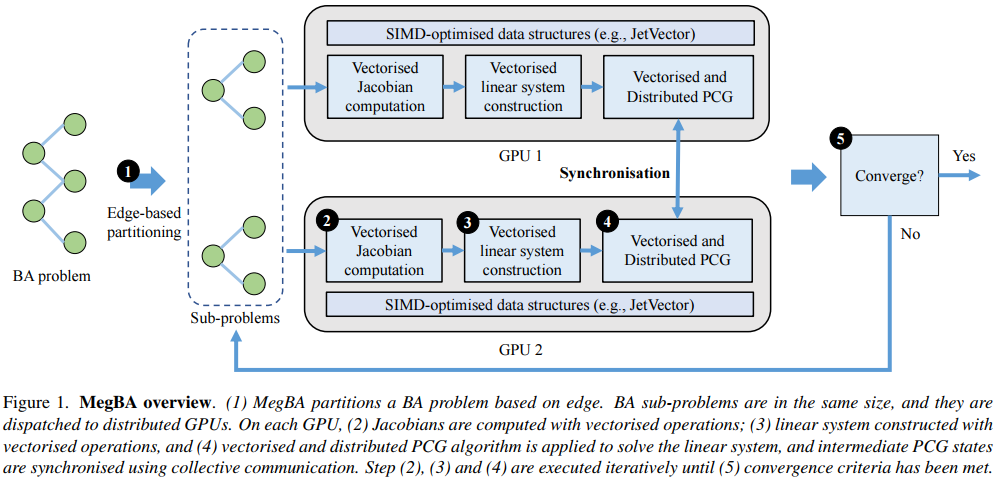

Project page: none

Paper: https://arxiv.org/pdf/2112.01349v2.pdf

Code: https://github.com/MegviiRobot/MegBA

Project page: none

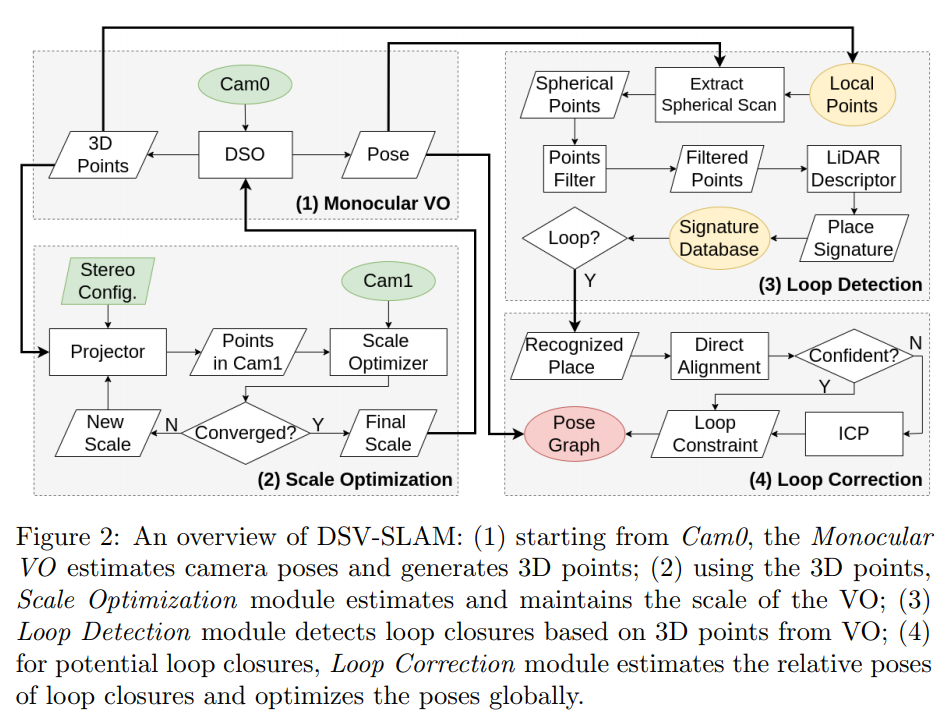

Paper: https://arxiv.org/pdf/2112.01890.pdf

Code: https://github.com/IRVLab/direct_stereo_slam

Related work: Direct Sparse Odometry; A Photometrically Calibrated Benchmark For Monocular Visual Odometry; https://github.com/JakobEngel/dso

Project page: none

Paper: https://arxiv.org/pdf/2111.03408.pdf

Code: https://github.com/joanpepcompany/MSC-VO

Source: Zhihu @冰颖机器人 (reposted with permission)

Editor: 计算机视觉SLAM (Computer Vision SLAM)
