点击上方“3D视觉工坊”,选择“星标”

干货第一时间送达

引导语:本文为“3D视觉创新应用(三维重建)竞赛”作品集系列之一,该作品由来自中国农业大学团队完成,团队成员:陆杰、苏杨、都敖。全文约2054个字,阅读时长约5分钟,旨在为更多开发者提供学习参考。

一、环境配置

奥比中光OpenNISDK安装(Linux)

OpenNI

------

Website: http://structure.io/openni

Building Prerequisites(前置依赖)

======================

Linux

-----

- GCC 4.x

From: http://gcc.gnu.org/releases.html

Or via apt:

sudo apt-get install g++

- Python 2.6+/3.x

From: http://www.python.org/download/

Or via apt:

sudo apt-get install python

- LibUSB 1.0.x

From: http://sourceforge.net/projects/libusb/files/libusb-1.0/

Or via apt:

sudo apt-get install libusb-1.0-0-dev

- LibUDEV

sudo apt-get install libudev-dev

- JDK 6.0

From: http://www.oracle.com/technetwork/java/javase/downloads/jdk-6u32-downloads-1594644.html

Or via apt:

sudo apt-get install openjdk-6-jdk

- FreeGLUT3

From: http://freeglut.sourceforge.net/index.php#download

Or via apt:

sudo apt-get install freeglut3-dev

- Doxygen

From: http://www.stack.nl/~dimitri/doxygen/download.html#latestsrc

Or via apt:

sudo apt-get install doxygen

- GraphViz

From: http://www.graphviz.org/Download_linux_ubuntu.php

Or via apt:

sudo apt-get install graphviz

orbbec@localhost:OpenNI$ ./install.sh

sudo ./NiViewer

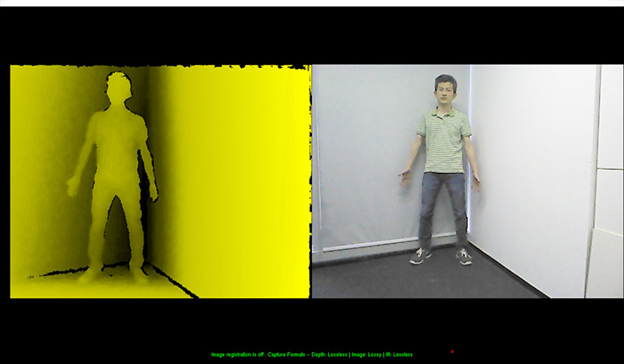

结果图片

OpenCV在Arm平台上编译

源文件官方地址,本文用的是OpenCV3.4.3版本。OpenCV编译的方法可通过百度搜索,编译完成后对orbbec中OpenNI2SDK和opencv进行配置。

OpenCV+OpenNI2配置

Includes

CFLAGS = ${shell pkg-config opencv --cflags}

#lib

LDFLAGS = ${shell pkg-config opencv --libs}

opencv环境配置没有问题后,在orbbec提供的OpenNI2的文件中示例代码中进行整合开发。文件位置在:

/OpenNI/Samples/

#Includes

CFLAGS += ${shell pkg-config opencv --cflags}

#lib

LDFLAGS += ${shell pkg-config opencv --libs}

二、数据获取

首先,“Makefile”文件内容进行修改,第3、4行为添加部分,内容是官方OpenNI2的相关文件路径:

include CommonDefs.mak

BIN_DIR = Bin

OPENNI2_INCLUDE=/home/orbbec/Desktop/OpenNI-Linux-Arm64-2.3.0.65/OpenNI-Linux-Arm64-2.3.0.65/include/

OPENNI2_REDIST=/home/orbbec/Desktop/OpenNI-Linux-Arm64-2.3.0.65/OpenNI-Linux-Arm64-2.3.0.65/Redist/

INC_DIRS = \

../../Include \

../../ThirdParty/GL/ \

../Common

SRC_FILES = *.cpp

ifeq ("$(OSTYPE)","Darwin")

CFLAGS += -DMACOS

LDFLAGS += -framework OpenGL -framework GLUT

else

CFLAGS += -DUNIX -DGLX_GLXEXT_LEGACY

USED_LIBS += glut GL

endif

USED_LIBS += OpenNI2

EXE_NAME = SimpleViewer

CFLAGS += -Wall

ifndef OPENNI2_INCLUDE

$(error OPENNI2_INCLUDE is not defined. Please define it or 'source' the OpenNIDevEnvironment file from the installation)

else ifndef OPENNI2_REDIST

$(error OPENNI2_REDIST is not defined. Please define it or 'source' the OpenNIDevEnvironment file from the installation)

endif

INC_DIRS += $(OPENNI2_INCLUDE)

include CommonCppMakefile

.PHONY: copy-redist

copy-redist:

cp -R $(OPENNI2_REDIST)/* $(OUT_DIR)

$(OUTPUT_FILE): copy-redist

# take this file's dir

COMMON_CPP_MAKE_FILE_DIR = $(dir $(lastword $(MAKEFILE_LIST)))

include $(COMMON_CPP_MAKE_FILE_DIR)CommonDefs.mak

# define a function to figure .o file for each source file (placed under intermediate directory)

SRC_TO_OBJ = $(addprefix ./$(INT_DIR)/,$(addsuffix .o,$(notdir $(basename $1))))

# create a list of all object files

OBJ_FILES = $(call SRC_TO_OBJ,$(SRC_FILES_LIST))

# define a function to translate any source file to its dependency file (note that the way we create

# dep files, as a side affect of compilation, always puts the files in the INT_DIR with suffix .d)

SRC_TO_DEP = $(addprefix ./$(INT_DIR)/,$(addsuffix .d,$(notdir $(basename $1))))

# create a list of all dependency files

DEP_FILES = $(call SRC_TO_DEP,$(SRC_FILES_LIST))

# older version of gcc doesn't support the '=' symbol in include dirs, so we replace it ourselves with sysroot

INC_DIRS_FROM_SYSROOT = $(patsubst =/%,$(TARGET_SYS_ROOT)/%,$(INC_DIRS))

# append the -I switch to each include directory

INC_DIRS_OPTION = $(foreach dir,$(INC_DIRS_FROM_SYSROOT),-I$(dir))

# append the -L switch to each library directory

LIB_DIRS_OPTION = $(foreach dir,$(LIB_DIRS),-L$(dir)) -L$(OUT_DIR)

# append the -l switch to each library used

USED_LIBS_OPTION = $(foreach lib,$(USED_LIBS),-l$(lib))

# append the -D switch to each define

DEFINES_OPTION = $(foreach def,$(DEFINES),-D$(def))

# tell compiler to use the target system root

ifdef TARGET_SYS_ROOT

CFLAGS += --sysroot=$(TARGET_SYS_ROOT)

LDFLAGS += --sysroot=$(TARGET_SYS_ROOT)

endif

# set Debug / Release flags

ifeq "$(CFG)" "Debug"

CFLAGS += -O0 -g

endif

ifeq "$(CFG)" "Release"

CFLAGS += -O2 -DNDEBUG

endif

CFLAGS += $(INC_DIRS_OPTION) $(DEFINES_OPTION)

CFLAGS += -fPIC -fvisibility=hidden

ifneq "$(ALLOW_WARNINGS)" "1"

CFLAGS += -Werror

ifeq ("$(OSTYPE)","Darwin")

CFLAGS += -Wno-deprecated-declarations -Wno-unused-private-field -Wno-unused-const-variable

endif

endif

LDFLAGS += $(LIB_DIRS_OPTION) $(USED_LIBS_OPTION)

##############this is out method######################

CFLAGS += ${shell pkg-config opencv --cflags}

LDFLAGS += ${shell pkg-config opencv --libs}

#####################################

# some lib / exe specifics

ifneq "$(LIB_NAME)" ""

OUTPUT_NAME = lib$(LIB_NAME).so

ifneq ("$(OSTYPE)","Darwin")

LDFLAGS += -Wl,--no-undefined

OUTPUT_NAME = lib$(LIB_NAME).so

OUTPUT_COMMAND = $(CXX) -o $(OUTPUT_FILE) $(OBJ_FILES) $(LDFLAGS) -shared

else

LDFLAGS += -undefined error

OUTPUT_NAME = lib$(LIB_NAME).dylib

OUTPUT_COMMAND = $(CXX) -o $(OUTPUT_FILE) $(OBJ_FILES) $(LDFLAGS) -dynamiclib -headerpad_max_install_names -install_name $(OUTPUT_NAME)

endif

endif

ifneq "$(EXE_NAME)" ""

OUTPUT_NAME = $(EXE_NAME)

# We want the executables to look for the .so's locally first:

LDFLAGS += -Wl,-rpath ./

OUTPUT_COMMAND = $(CXX) -o $(OUTPUT_FILE) $(OBJ_FILES) $(LDFLAGS)

endif

ifneq "$(SLIB_NAME)" ""

OUTPUT_NAME = lib$(SLIB_NAME).a

ifneq ("$(OSTYPE)","Darwin")

OUTPUT_COMMAND = $(AR) -cq $(OUTPUT_FILE) $(OBJ_FILES)

else

OUTPUT_COMMAND = libtool -static -o $(OUTPUT_FILE) $(OBJ_FILES)

endif

endif

define CREATE_SRC_TARGETS

# create a target for the object file (the CXX command creates both an .o file

# and a .d file)

ifneq ("$(OSTYPE)","Darwin")

$(call SRC_TO_OBJ,$1) : $1 | $(INT_DIR)

$(CXX) -MD -MP -MT "$(call SRC_TO_DEP,$1) $$@" -c $(CFLAGS) -o $$@ $$<

else

$(call SRC_TO_OBJ,$1) : $1 | $(INT_DIR)

$(CXX) -x c++ -c $(CFLAGS) -o $$@ $$<

endif

endef

#############################################################################

# Targets

#############################################################################

.PHONY: clean-objs clean-defs

include $(COMMON_CPP_MAKE_FILE_DIR)CommonTargets.mak

# create targets for each source file

$(foreach src,$(SRC_FILES_LIST),$(eval $(call CREATE_SRC_TARGETS,$(src))))

# include all dependency files (we don't need them the first time, so we can use -include)

-include $(DEP_FILES)

$(OUTPUT_FILE): $(OBJ_FILES)

$(OUTPUT_COMMAND)

clean-objs:

rm -rf $(OBJ_FILES)

clean-defs:

rm -rf $(DEP_FILES)

clean: clean-objs clean-defs

获取深度图

#include <OpenNI.h>

#include "Viewer.h"

#include <stdio.h>

#include <iostream>

#include <highgui.h>

#include <opencv2/opencv.hpp>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

using namespace std;

using namespace openni;

#define SAMPLE_READ_WAIT_TIMEOUT 2000 //2000ms

int wasKeyboardHit();

int main(int argc, char** argv)

{

openni::Status rc = openni::STATUS_OK;

openni::Status rc_d = openni::STATUS_OK;

openni::Device device;

openni::VideoStream depth, color;

const char* deviceURI = openni::ANY_DEVICE;

rc = openni::OpenNI::initialize();

printf("After initialization:\n%s\n", openni::OpenNI::getExtendedError());

rc = device.open(deviceURI);

if (rc != openni::STATUS_OK)

{

printf("SimpleViewer: Device open failed:\n%s\n", openni::OpenNI::getExtendedError());

openni::OpenNI::shutdown();

return 1;

}

rc = depth.create(device, openni::SENSOR_DEPTH);

if (rc == openni::STATUS_OK)

rc = depth.start();

openni::VideoFrameRef frameDepth;

openni::DepthPixel* pDepth;

cout<<"stream start, press any key to stop..."<<endl;

VideoStream* streams[] = {&depth, &color};

while (!wasKeyboardHit())

{

int depthstream = -1;

rc = OpenNI::waitForAnyStream(streams, 2, &depthstream, SAMPLE_READ_WAIT_TIMEOUT);

if (rc != STATUS_OK)

{

printf("Wait failed! (timeout is %d ms)\n%s\n", SAMPLE_READ_WAIT_TIMEOUT, OpenNI::getExtendedError());

continue;

}

cout<<"waitForAnyStream finished, press any key to stop..."<<endl;

//get color frame

rc_d = depth.readFrame(&frameDepth);

cout<<"read Frame finished, press any key to stop..."<<endl;

if (rc != STATUS_OK || rc_d != STATUS_OK)

{

printf("Read failed!\n%s\n", OpenNI::getExtendedError());

continue;

}

int middleIndex = (frameDepth.getHeight() + 1)*frameDepth.getWidth() / 2;

pDepth = (DepthPixel*)frameDepth.getData();

cout << pDepth[middleIndex] <<endl;

}

const cv::Mat mImageDepth( frameDepth.getHeight(), frameDepth.getWidth(), CV_16UC1, (void*)frameDepth.getData());

cv::Mat mScaledDepth;

int iMaxDepth = depth.getMaxPixelValue();

mImageDepth.convertTo( mScaledDepth, CV_8U, 255.0 / iMaxDepth );

cv::imwrite( "/home/orbbec/Desktop/createDepthImg/Bin/Arm64-Release/DepthImage.png", mScaledDepth );

}

获取彩色图

#include <opencv2/opencv.hpp>

using namespace cv;

int main()

{

VideoCapture videoCapture;

videoCapture.open(cv::CAP_ANY);

if (!videoCapture.isOpened())

{

printf("open UVC color camera failed.");

}

//set codec format

videoCapture.set(CV_CAP_PROP_FOURCC, CV_FOURCC('M', 'J', 'P', 'G'));

//set resolution

videoCapture.set(CV_CAP_PROP_FRAME_WIDTH, 640);

videoCapture.set(CV_CAP_PROP_FRAME_HEIGHT, 480);

for(int i=0;i<10;i++)

{

Mat frame;

videoCapture >> frame;

Vec3b &bgr = frame.at<Vec3b>(frame.rows/2, frame.cols/2);

//print the r g b value of the middle pixel of the frame

printf("r = %02d, g = %02d, b = %02d\n",

bgr[2] & 0xff,

bgr[1] & 0xff,

bgr[0] & 0xff);

waitKey(30); //delay 30ms

imwrite("/home/orbbec/Desktop/saveRGBImg/Bin/Arm64-Release/saved_img.png",frame);

}

return 0;

}

获取彩色点云

#include <iostream>

#include <sstream>

#include "OpenNI.h"

#include "OniSampleUtilities.h"

#include <opencv2/opencv.hpp>

using namespace openni;

using namespace cv;

#define MIN_DISTANCE 20 //µ¥Î»ºÁÃ×

#define MAX_DISTANCE 4000 //µ¥Î»ºÁÃ×

#define RESOULTION_X 640.0 //±ê¶šÊ±µÄ·Ö±æÂÊ

#define RESOULTION_Y 480.0 //±ê¶šÊ±µÄ·Ö±æÂÊ

#define MAX_FRAME_COUNT 50

typedef struct xnIntrinsic_Params

{

xnIntrinsic_Params() :

c_x(320.0), c_y(240.0), f_x(480.0), f_y(480.0)

{}

xnIntrinsic_Params(float c_x_, float c_y_, float f_x_, float f_y_) :

c_x(c_x_), c_y(c_y_), f_x(f_x_),f_y(f_y_)

{}

float c_x; //uÖáÉϵĹéÒ»»¯œ¹Ÿà

float c_y; //vÖáÉϵĹéÒ»»¯œ¹Ÿà

float f_x; //Ö÷µãx×ø±ê

float f_y; //Ö÷µãy×ø±ê

}xIntrinsic_Params;

xIntrinsic_Params g_IntrinsicParam; //ŽæŽ¢Ïà»úÄڲεÄÈ«ŸÖ±äÁ¿

void getCameraParams(openni::Device& Device, xIntrinsic_Params& IrParam)

{

OBCameraParams cameraParam;

int dataSize = sizeof(cameraParam);

memset(&cameraParam, 0, sizeof(cameraParam));

openni::Status rc = Device.getProperty(openni::OBEXTENSION_ID_CAM_PARAMS, (uint8_t *)&cameraParam, &dataSize);

if (rc != openni::STATUS_OK)

{

std::cout << "Error:" << openni::OpenNI::getExtendedError() << std::endl;

return;

}

std::cout << "IrParam.f_x = " << IrParam.f_x << std::endl;

std::cout << "IrParam.f_y = " << IrParam.f_y << std::endl;

std::cout << "IrParam.c_x = " << IrParam.c_x << std::endl;

std::cout << "IrParam.c_y = " << IrParam.c_y << std::endl;

}

void convertDepthToPointCloud(const uint16_t *pDepth, Mat color, int width, int height,const char *ply_filename)

{

if (NULL == pDepth)

{

printf("depth frame is NULL!");

return;

}

FILE *fp;

fp = fopen(ply_filename, "w");

int valid_count = 0;

uint16_t max_depth = MAX_DISTANCE;

uint16_t min_depth = MIN_DISTANCE;

for (int v = 0; v < height; ++v)

{

for (int u = 0; u < width; ++u)

{

uint16_t depth = pDepth[v * width + u];

if (depth <= 0 || depth < min_depth || depth > max_depth)

continue;

valid_count += 1;

}

}

fprintf(fp, "ply\n");

fprintf(fp, "format ascii 1.0\n");

fprintf(fp, "element vertex %d\n", valid_count);

fprintf(fp, "property float x\n");

fprintf(fp, "property float y\n");

fprintf(fp, "property float z\n");

fprintf(fp, "property uchar red\n");

fprintf(fp, "property uchar green\n");

fprintf(fp, "property uchar blue\n");

fprintf(fp, "end_header\n");

float world_x, world_y, world_z;

for (int v = 0; v < height; ++v)

{

for (int u = 0; u < width; ++u)

{

uint16_t depth = pDepth[v * width + u];

Vec3b &bgr = color.at<Vec3b>(v, u);

int color_b= bgr[0] & 0xff;

int color_g= bgr[1] & 0xff;

int color_r= bgr[2] & 0xff;

if (depth <= 0 || depth < min_depth || depth > max_depth)

continue;

float fdx = g_IntrinsicParam.f_x * ((float)(width) / RESOULTION_X);

float fdy = g_IntrinsicParam.f_y * ((float)(height) / RESOULTION_Y);

float u0 = g_IntrinsicParam.c_x * ((float)(width)/ RESOULTION_X);

float v0 = g_IntrinsicParam.c_y * ((float)(height) / RESOULTION_Y);

float tx = (u - u0) / fdx;

float ty = (v - v0) / fdy;

world_x = depth * tx;

world_y = depth * ty;

world_z = depth;

fprintf(fp, "%f %f %f %d %d %d\n", world_x, world_y, world_z, color_r,color_g,color_b);

}

}

fclose(fp);

}

int g_imageCount = 0;

void analyzeFrame(const VideoFrameRef& frame, Mat color)

{

DepthPixel* pDepth;

//¹¹ÔìµãÔÆÎÄŒþÃû

char plyFileName[256] = "";

g_imageCount++;

if (MAX_FRAME_COUNT < g_imageCount)

{

return;

}

std::stringstream filename;

filename << "pointcloud_";

filename << g_imageCount;

filename << ".ply";

filename >> plyFileName;

int middleIndex = (frame.getHeight() + 1)*frame.getWidth() / 2;

switch (frame.getVideoMode().getPixelFormat())

{

case PIXEL_FORMAT_DEPTH_1_MM:

pDepth = (DepthPixel*)frame.getData();

printf("[%08llu] %8d\n", (long long)frame.getTimestamp(),pDepth[middleIndex]);

convertDepthToPointCloud(pDepth, color, frame.getWidth(), frame.getHeight(), plyFileName);

break;

default:

printf("Unknown format\n");

}

}

int main(int argc, char* argv[])

{

//initialize openNI sdk

Status rc = OpenNI::initialize();

if (rc != STATUS_OK)

{

printf("Initialize failed\n%s\n", OpenNI::getExtendedError());

return 1;

}

//open deivce

Device device;

rc = device.open(ANY_DEVICE);

if (rc != STATUS_OK)

{

printf("Couldn't open device\n%s\n", OpenNI::getExtendedError());

return 2;

}

VideoCapture videoCapture;

videoCapture.open(cv::CAP_ANY);

if (!videoCapture.isOpened())

{

printf("open UVC color camera failed.");

}

//set codec format

videoCapture.set(CV_CAP_PROP_FOURCC, CV_FOURCC('M', 'J', 'P', 'G'));

//set resolution

videoCapture.set(CV_CAP_PROP_FRAME_WIDTH, 640);

videoCapture.set(CV_CAP_PROP_FRAME_HEIGHT, 480);

VideoStream depth;

//create depth stream

if (device.getSensorInfo(SENSOR_DEPTH) != NULL)

{

rc = depth.create(device, SENSOR_DEPTH);

if (rc != STATUS_OK)

{

printf("Couldn't create depth stream\n%s\n", OpenNI::getExtendedError());

}

}

rc = depth.start();

if (rc != STATUS_OK)

{

printf("Couldn't start the depth stream\n%s\n", OpenNI::getExtendedError());

}

openni::VideoFrameRef frameDepth;

for(int i=0;i<10;i++)

{

Mat color_frame;

videoCapture >> color_frame;

Vec3b &bgr = color_frame.at<Vec3b>(color_frame.rows/2, color_frame.cols/2);

//print the r g b value of the middle pixel of the frame

printf("r = %02d, g = %02d, b = %02d\n",

bgr[2] & 0xff,

bgr[1] & 0xff,

bgr[0] & 0xff);

rc = depth.readFrame(&frameDepth);

analyzeFrame(frameDepth, color_frame);

if (rc != openni::STATUS_OK)

continue;

waitKey(30);

}

depth.stop();

depth.destroy();

//close device

device.close();

//shutdown OpenNI

OpenNI::shutdown();

return 0;

三、数据配准

配准代码

#include <iostream>

#include <string>

#include <pcl/io/ply_io.h>

#include <pcl/registration/icp.h>

#include <pcl/visualization/pcl_visualizer.h>

#include <pcl/console/time.h> // TicToc

#include <pcl/common/common.h>

#include <pcl/common/angles.h>

#include <pcl/common/transforms.h>

#include <pcl/point_cloud.h>

#include <pcl/point_types.h>

#include <pcl/io/pcd_io.h>

#include <pcl/registration/transformation_estimation_svd.h>

typedef pcl::PointXYZRGB PointT;

typedef pcl::PointCloud<PointT> PointCloudT;

using namespace pcl;

int main(int argc,char* argv[])

{

// The point clouds we will be using

PointCloudT::Ptr cloud_in(new PointCloudT); // Original point cloud

PointCloudT::Ptr cloud_in2(new PointCloudT); // Transformed point cloud

PointCloudT::Ptr cloud_icp(new PointCloudT); // ICP output point cloud

PointCloudT::Ptr corrspond_in(new PointCloudT); // Original point cloud

PointCloudT::Ptr corrspond_out(new PointCloudT); // Transformed point cloud

PointCloudT::Ptr show_transformed_clouds(new PointCloudT);

std::mutex cloud_mutex_;

// Checking program arguments

if (argc < 3)

{

printf("Usage :\n");

printf("\t\t%s file.ply number_of_ICP_iterations\n", argv[0]);

PCL_ERROR("Provide one ply file.\n");

return (-1);

}

pcl::console::TicToc time;

time.tic();

if (pcl::io::loadPLYFile(argv[1], *cloud_in) < 0)

{

PCL_ERROR("Error loading cloud %s.\n", argv[1]);

return (-1);

}

if (pcl::io::loadPLYFile(argv[2], *cloud_in2) < 0)

{

PCL_ERROR("Error loading cloud %s.\n", argv[2]);

return (-1);

}

std::cout << "\nLoaded file " << argv[1] << " (" << cloud_in->size() << " points) in " << time.toc() << " ms\n" << std::endl;

Eigen::Matrix4d transformation_matrix = Eigen::Matrix4d::Identity();

PointT point_in_1(-0.201973, -0.326478, 1.578000, 0, 0, 0);

PointT point_in_2(-0.328673, -0.325839, 1.616000, 0, 0, 0);

PointT point_in_3(-0.297260, -0.269961, 1.730000, 0, 0, 0);

PointT point_in_4(-0.169498, -0.276550, 1.696000, 0, 0, 0);

corrspond_in->push_back(point_in_1);

corrspond_in->push_back(point_in_2);

corrspond_in->push_back(point_in_3);

corrspond_in->push_back(point_in_4);

PointT point_in_5(0.165862, -0.074352, 1.631000, 0, 0, 0);

PointT point_in_6(0.290257, -0.068296, 1.623000, 0, 0, 0);

PointT point_in_7(0.298880, -0.133429, 1.522000, 0, 0, 0);

PointT point_in_8(0.175316, -0.138128, 1.515000, 0, 0, 0);

corrspond_out->push_back(point_in_5);

corrspond_out->push_back(point_in_6);

corrspond_out->push_back(point_in_7);

corrspond_out->push_back(point_in_8);

cout << corrspond_in->size() << endl;

cout << corrspond_out->size() << endl;

//利用SVD方法求解变换矩阵

pcl::registration::TransformationEstimationSVD<pcl::PointXYZRGB, pcl::PointXYZRGB> TESVD;

pcl::registration::TransformationEstimationSVD<pcl::PointXYZRGB, pcl::PointXYZRGB>::Matrix4 transformation2;

TESVD.estimateRigidTransformation(*corrspond_in, *corrspond_out, transformation2);

cout << transformation2 << endl;

pcl::transformPointCloud(*cloud_in, *show_transformed_clouds, transformation2);

*show_transformed_clouds += *cloud_in2;

pcl::PCDWriter writer;

writer.write("registered.pcd", *show_transformed_clouds, false);

return (0);

}

cmake_minimum_required(VERSION 2.6 FATAL_ERROR)

project(transform_twoside)

find_package(PCL 1.5 REQUIRED)

include_directories(${PCL_INCLUDE_DIRS})

link_directories(${PCL_LIBRARY_DIRS})

add_definitions(${PCL_DEFINITIONS})

add_executable (transform_twoside transform_twoside.cpp)

target_link_libraries (transform_twoside ${PCL_LIBRARIES})

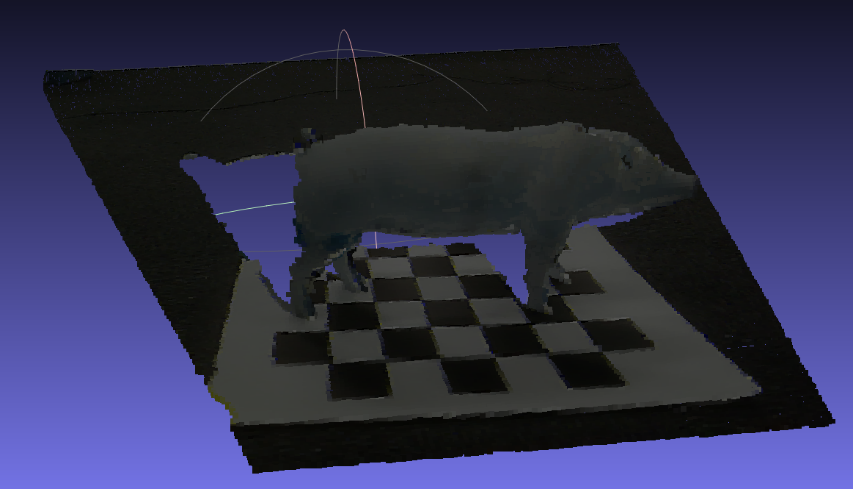

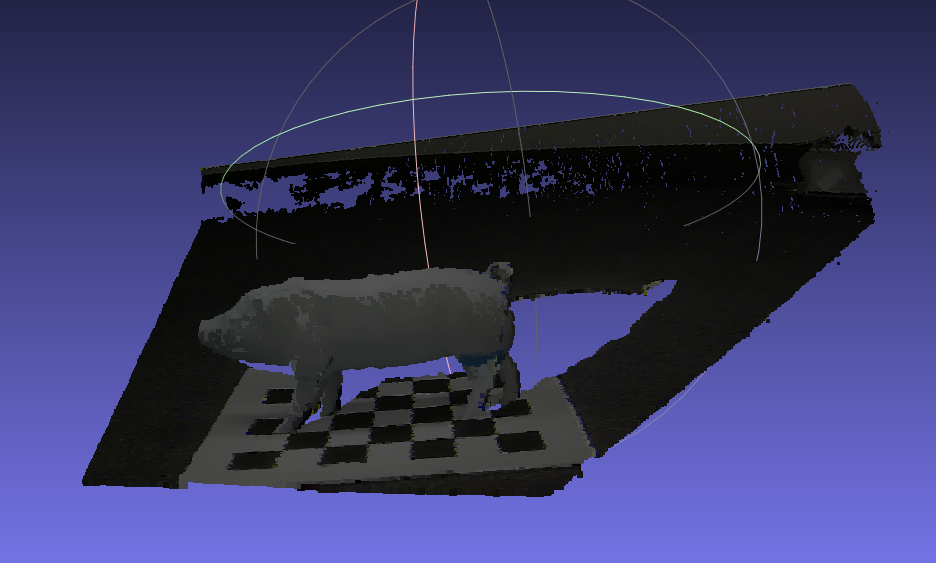

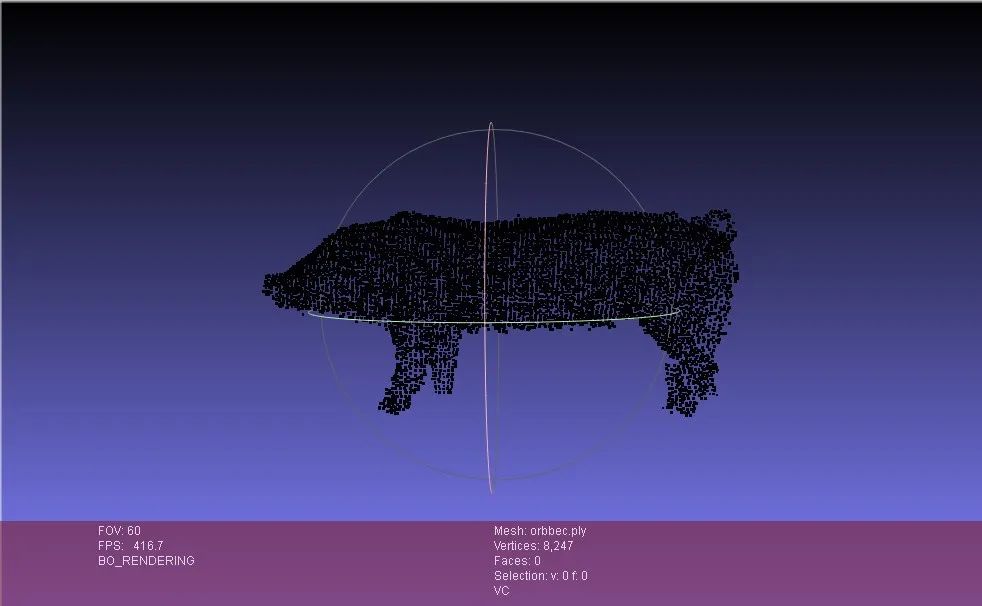

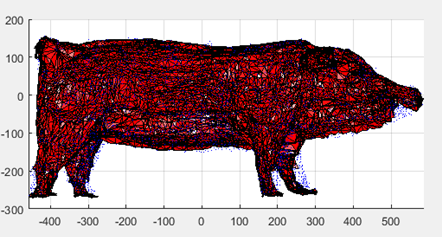

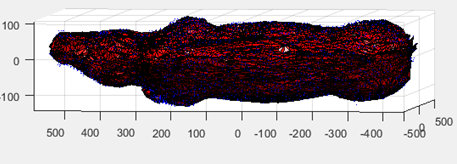

配准后数据:

四、姿势归一化

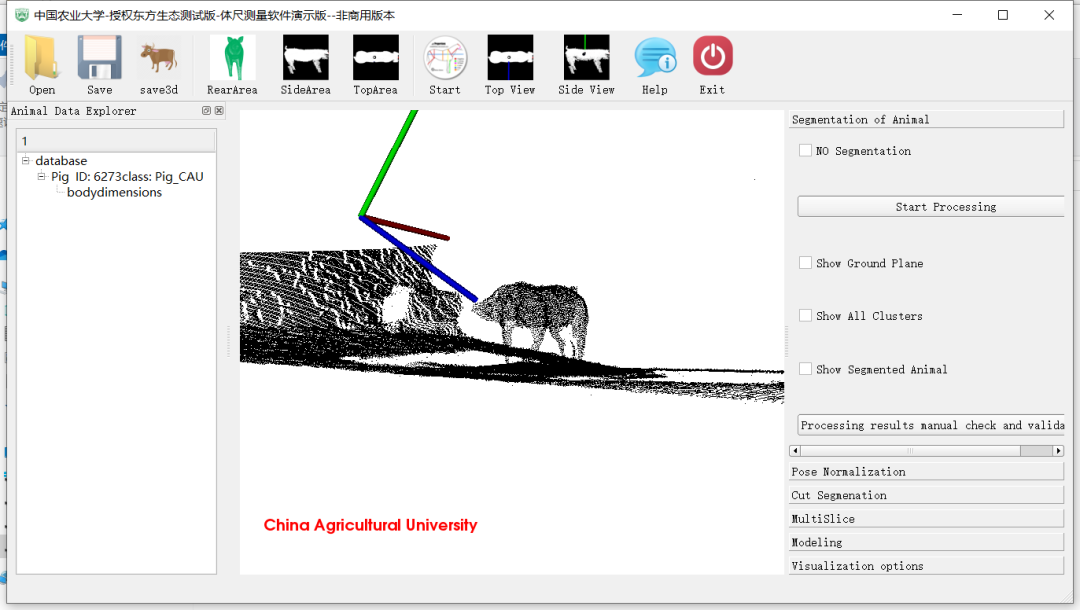

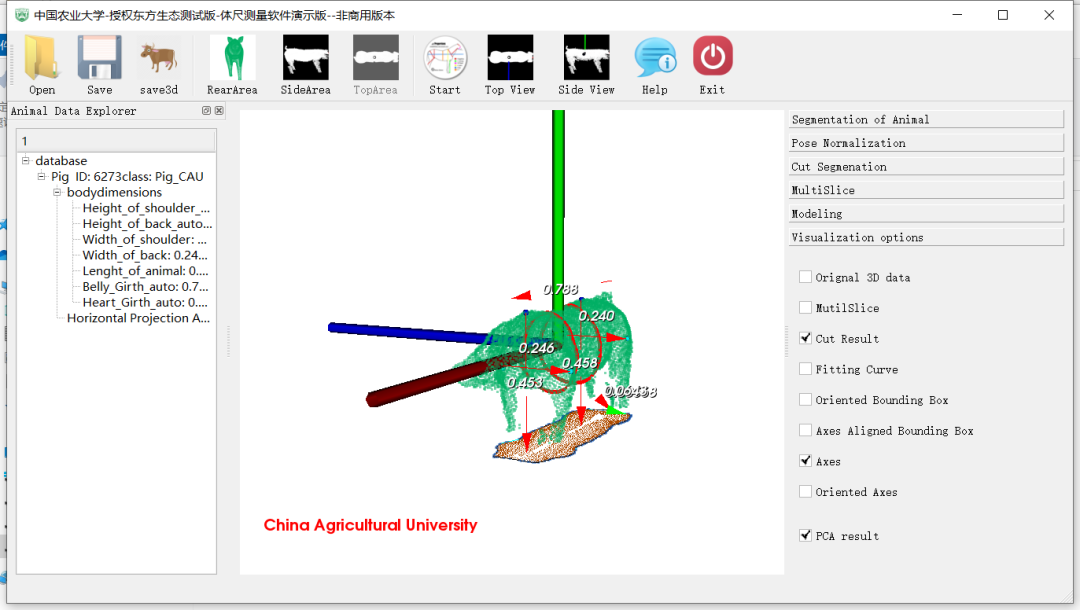

软件处理方法(自主研发)

@article{GUO201760,

title = "LSSA_CAU: An interactive 3d point clouds analysis software for body measurement of livestock with similar forms of cows or pigs",

journal = "Computers and Electronics in Agriculture",

volume = "138",

pages = "60 - 68",

year = "2017",

issn = "0168-1699",

doi = "https://doi.org/10.1016/j.compag.2017.04.014",

url = "http://www.sciencedirect.com/science/article/pii/S0168169917301746",

}

@article{GUO201959,

title = "A bilateral symmetry based pose normalization framework applied to livestock body measurement in point clouds",

journal = "Computers and Electronics in Agriculture",

volume = "160",

pages = "59 - 70",

year = "2019",

issn = "0168-1699",

doi = "https://doi.org/10.1016/j.compag.2019.03.010",

url = "http://www.sciencedirect.com/science/article/pii/S0168169918314893",

}

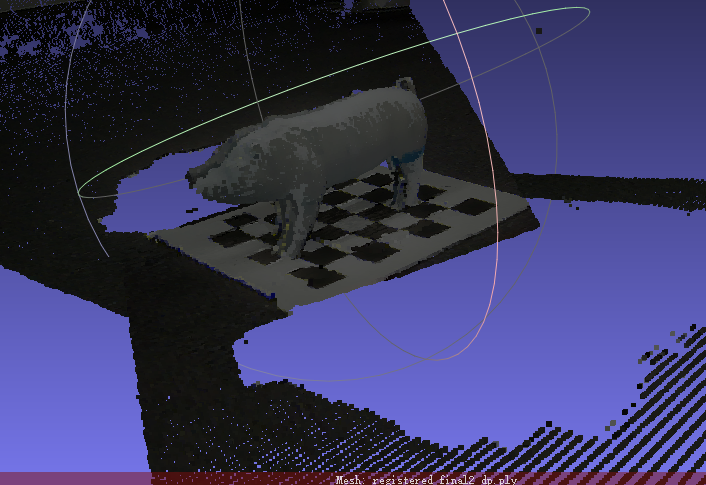

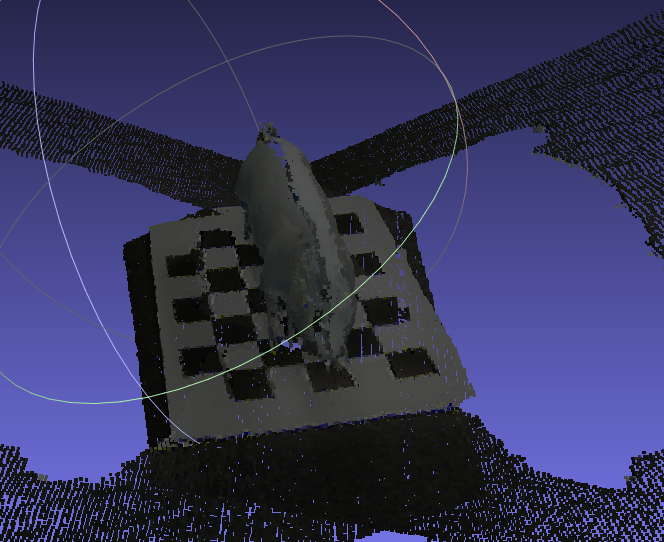

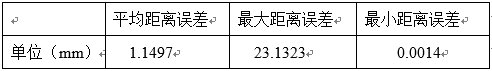

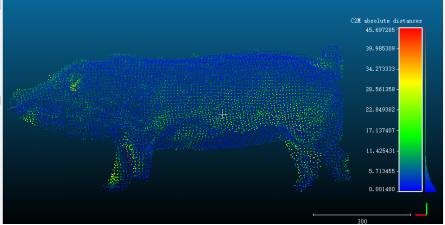

处理结果:

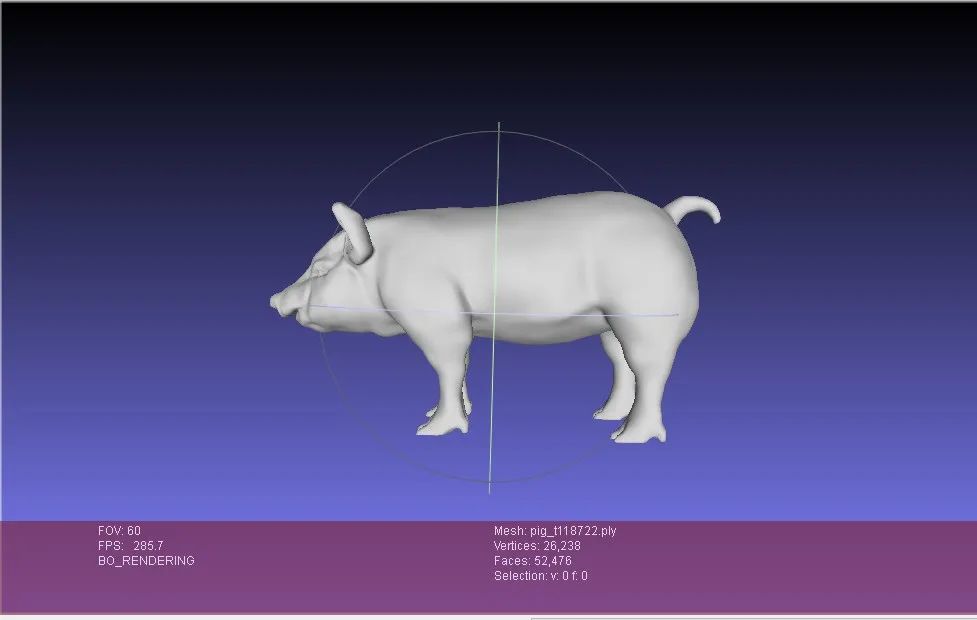

五、三维重建

数据准备

扫描数据:第四章获取的归一化后猪的点云数据

另外还需要选取一些特征点,使配准效果更好。因此,我们根据农业上猪的体尺测量,在模板数据和扫描数据上分别选取了37个对应的特征点。

初始化

非刚配准

结果评价

六、Demo演示

重磅!3DCVer-学术论文写作投稿 交流群已成立

扫码添加小助手微信,可申请加入3D视觉工坊-学术论文写作与投稿 微信交流群,旨在交流顶会、顶刊、SCI、EI等写作与投稿事宜。

同时也可申请加入我们的细分方向交流群,目前主要有3D视觉、CV&深度学习、SLAM、三维重建、点云后处理、自动驾驶、多传感器融合、CV入门、三维测量、VR/AR、3D人脸识别、医疗影像、缺陷检测、行人重识别、目标跟踪、视觉产品落地、视觉竞赛、车牌识别、硬件选型、学术交流、求职交流、ORB-SLAM系列源码交流、深度估计等微信群。

一定要备注:研究方向+学校/公司+昵称,例如:”3D视觉 + 上海交大 + 静静“。请按照格式备注,可快速被通过且邀请进群。原创投稿也请联系。

▲长按加微信群或投稿

▲长按关注公众号

▲长按关注公众号

3D视觉从入门到精通知识星球:针对3D视觉领域的知识点汇总、入门进阶学习路线、最新paper分享、疑问解答四个方面进行深耕,更有各类大厂的算法工程人员进行技术指导。与此同时,星球将联合知名企业发布3D视觉相关算法开发岗位以及项目对接信息,打造成集技术与就业为一体的铁杆粉丝聚集区,近2000星球成员为创造更好的AI世界共同进步,知识星球入口:

学习3D视觉核心技术,扫描查看介绍,3天内无条件退款

圈里有高质量教程资料、可答疑解惑、助你高效解决问题 觉得有用,麻烦给个赞和在看~