The Complete Collection! Object Tracking Resources from the Past Two Years (Papers, Model Code, Leading Labs)


2020-08-12


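CVMart Guide: Object tracking is one of the most popular and most challenging research topics in computer vision. Deep-learning-based object tracking has made great progress in recent years. To make research and study easier, CVMart has compiled a collection of object tracking resources from 2019 to 2020, including top-conference papers (CVPR/ECCV/ICCV), algorithm overviews, and leading object tracking labs in China and abroad. Details below: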

Top-Conference Papers

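(Due to space limits, only the first 5 papers of each subsection are shown below; the full lists are available via the original article.)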

2020

CVPR 2020 (33 papers)

Full list: https://bbs.cvmart.net/topics/2733

【1】How to Train Your Deep Multi-Object Tracker

Authors | Yihong Xu, Aljosa Osep, Yutong Ban, Radu Horaud, Laura Leal-Taixe, Xavier Alameda-Pineda

Paper | https://arxiv.org/abs/1906.06618

Code | https://github.com/yihongXU/deepMOT

【2】Learning a Neural Solver for Multiple Object Tracking

Authors | Guillem Braso, Laura Leal-Taixe

Paper | https://arxiv.org/abs/1912.07515

Code | https://github.com/dvl-tum/mot_neural_solver (PyTorch)

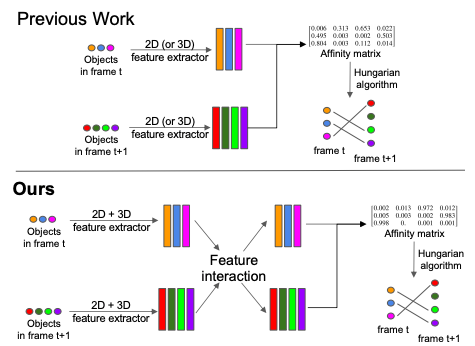

【3】GNN3DMOT: Graph Neural Network for 3D Multi-Object Tracking With 2D-3D Multi-Feature Learning

Authors | Xinshuo Weng, Yongxin Wang, Yunze Man, Kris M. Kitani

Paper | https://arxiv.org/abs/2006.07327

Code | https://github.com/xinshuoweng/GNN3DMOT (PyTorch)

【4】A Unified Object Motion and Affinity Model for Online Multi-Object Tracking

Authors | Junbo Yin, Wenguan Wang, Qinghao Meng, Ruigang Yang, Jianbing Shen

Paper | https://arxiv.org/abs/2003.11291

Code | https://github.com/yinjunbo/UMA-MOT

【5】Learning Multi-Object Tracking and Segmentation From Automatic Annotations

Authors | Lorenzo Porzi, Markus Hofinger, Idoia Ruiz, Joan Serrat, Samuel Rota Bulo, Peter Kontschieder

Paper | https://arxiv.org/abs/1912.02096

ECCV 2020 (26 papers)

Full list: https://bbs.cvmart.net/topics/3097

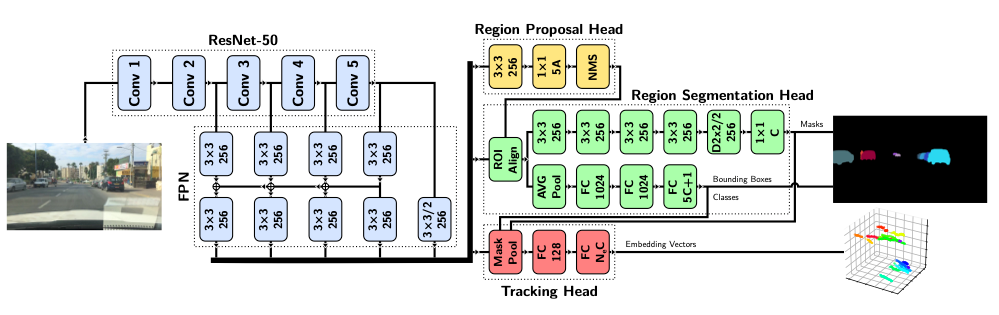

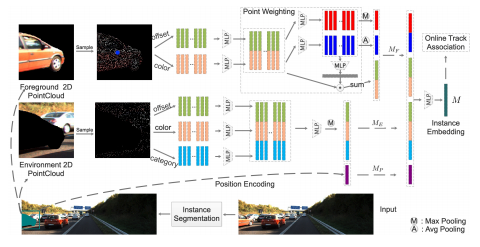

【1】Segment as Points for Efficient Online Multi-Object Tracking and Segmentation (Oral)

Paper | https://arxiv.org/abs/2007.01550

Authors | Zhenbo Xu, Wei Zhang, Xiao Tan, Wei Yang, Huan Huang, Shilei Wen, Errui Ding, Liusheng Huang

Code | https://github.com/detectRecog/PointTrack

【2】Chained-Tracker: Chaining Paired Attentive Regression Results for End-to-End Joint Multiple-Object Detection and Tracking

Paper | https://arxiv.org/abs/2007.14557

Authors | Jinlong Peng, Changan Wang, Fangbin Wan, Yang Wu, Yabiao Wang, Ying Tai, Chengjie Wang, Jilin Li, Feiyue Huang, Yanwei Fu

【3】TAO: A Large-scale Benchmark for Tracking Any Object

Paper | https://arxiv.org/abs/2005.10356

Authors | Achal Dave, Tarasha Khurana, Pavel Tokmakov, Cordelia Schmid, Deva Ramanan

Code | https://github.com/TAO-Dataset/tao

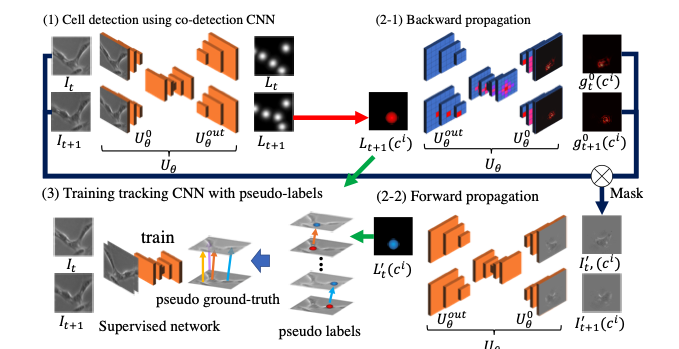

【4】Weakly-Supervised Cell Tracking via Backward-and-Forward Propagation

Paper | http://arxiv.org/abs/2007.15258

Authors | Kazuya Nishimura, Junya Hayashida, Chenyang Wang, Dai Fei Elmer Ker, Ryoma Bise

Code | https://github.com/naivete5656/WSCTBFP

【5】Towards End-to-end Video-based Eye-Tracking

Paper | https://arxiv.org/abs/2007.13120

Authors | Seonwook Park, Emre Aksan, Xucong Zhang, Otmar Hilliges

Code | https://ait.ethz.ch/projects/2020/EVE

2019

CVPR 2019 (19 papers)

Full list: https://bbs.cvmart.net/articles/523

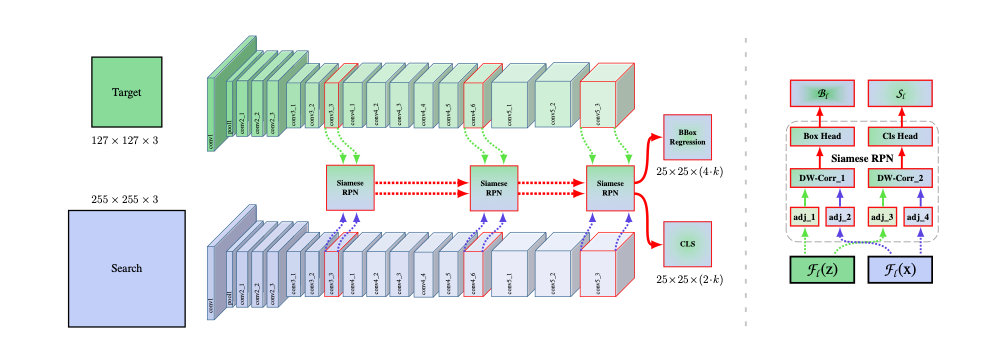

【1】SiamRPN++: Evolution of Siamese Visual Tracking With Very Deep Networks

Authors | Bo Li, Wei Wu, Qiang Wang, Fangyi Zhang, Junliang Xing, Junjie Yan

Paper | https://arxiv.org/abs/1812.11703

Code | https://github.com/STVIR/pysot

【2】Tracking by Animation: Unsupervised Learning of Multi-Object Attentive Trackers

Authors | Zhen He, Jian Li, Daxue Liu, Hangen He, David Barber

Paper | https://arxiv.org/abs/1809.03137

Code | https://github.com/zhen-he/tracking-by-animation

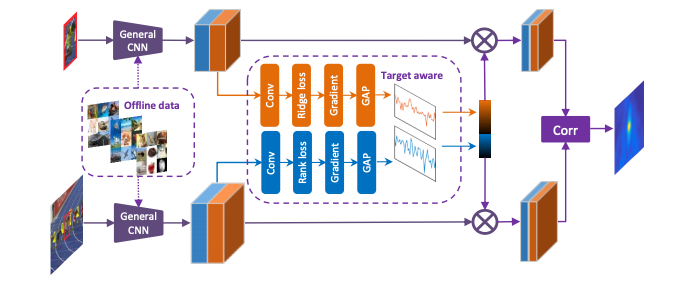

【3】Target-Aware Deep Tracking

Authors | Xin Li, Chao Ma, Baoyuan Wu, Zhenyu He, Ming-Hsuan Yang

Paper | https://arxiv.org/abs/1904.01772

Code | https://github.com/XinLi-zn/TADT

【4】SPM-Tracker: Series-Parallel Matching for Real-Time Visual Object Tracking

Authors | Guangting Wang, Chong Luo, Zhiwei Xiong, Wenjun Zeng

Paper | https://arxiv.org/abs/1904.04452

【5】Deeper and Wider Siamese Networks for Real-Time Visual Tracking

Authors | Zhipeng Zhang, Houwen Peng

Paper | https://arxiv.org/abs/1901.01660

Code | https://github.com/researchmm/SiamDW

ICCV 2019 (11 papers)

Full list: https://bbs.cvmart.net/articles/1190

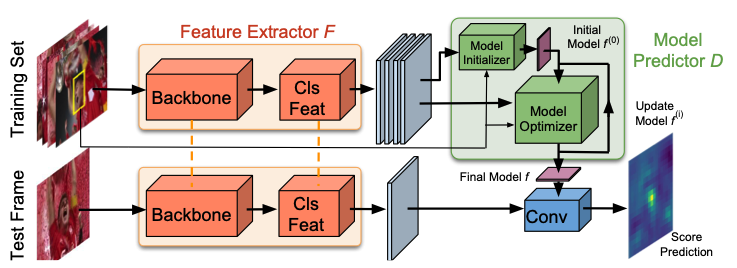

【1】Learning Discriminative Model Prediction for Tracking (Oral)

Authors | Goutam Bhat, Martin Danelljan, Luc Van Gool, Radu Timofte

Paper | https://arxiv.org/abs/1904.07220

Code | https://github.com/visionml/pytracking

【2】GradNet: Gradient-Guided Network for Visual Object Tracking

Authors | Peixia Li, Boyu Chen, Wanli Ouyang, Dong Wang, Xiaoyun Yang, Huchuan Lu

Paper | https://arxiv.org/abs/1909.06800

Code | https://github.com/LPXTT/GradNet-Tensorflow

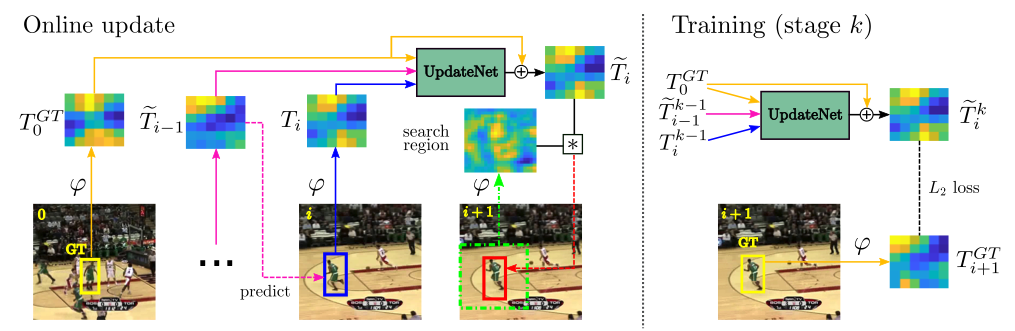

【3】Learning the Model Update for Siamese Trackers

Authors | Lichao Zhang, Abel Gonzalez-Garcia, Joost van de Weijer, Martin Danelljan, Fahad Shahbaz Khan

Paper | https://arxiv.org/abs/1908.00855

Code | https://github.com/zhanglichao/updatenet

【4】'Skimming-Perusal' Tracking: A Framework for Real-Time and Robust Long-Term Tracking

Authors | Bin Yan, Haojie Zhao, Dong Wang, Huchuan Lu, Xiaoyun Yang

Paper | https://arxiv.org/abs/1909.01840

Code | https://github.com/iiau-tracker/SPLT

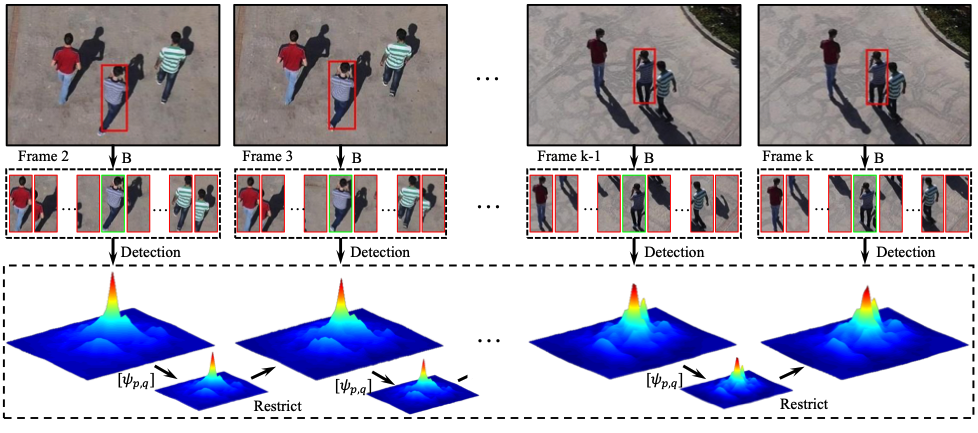

【5】Learning Aberrance Repressed Correlation Filters for Real-Time UAV Tracking

Authors | Ziyuan Huang, Changhong Fu, Yiming Li, Fuling Lin, Peng Lu

Paper | https://arxiv.org/abs/1908.02231

Code | https://github.com/vision4robotics/ARCF-tracker

Algorithms

To start, two recommended survey papers:

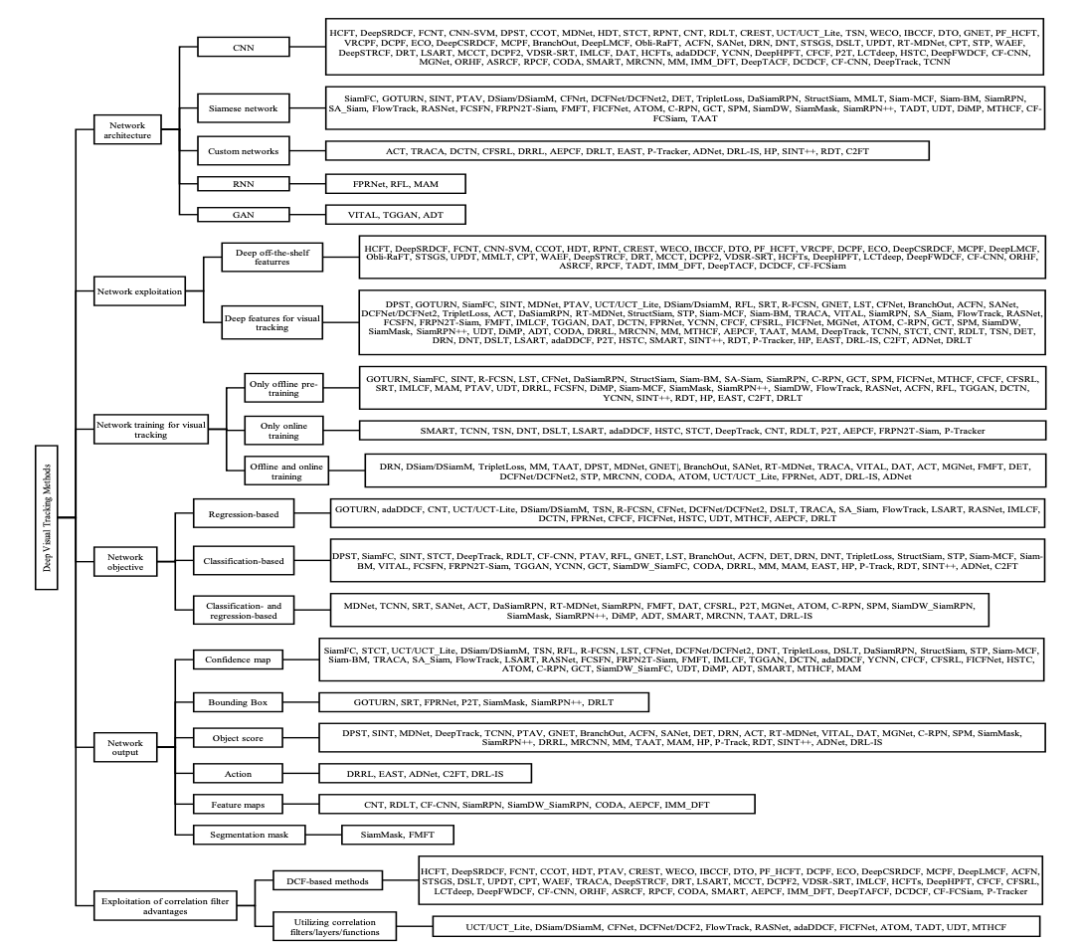

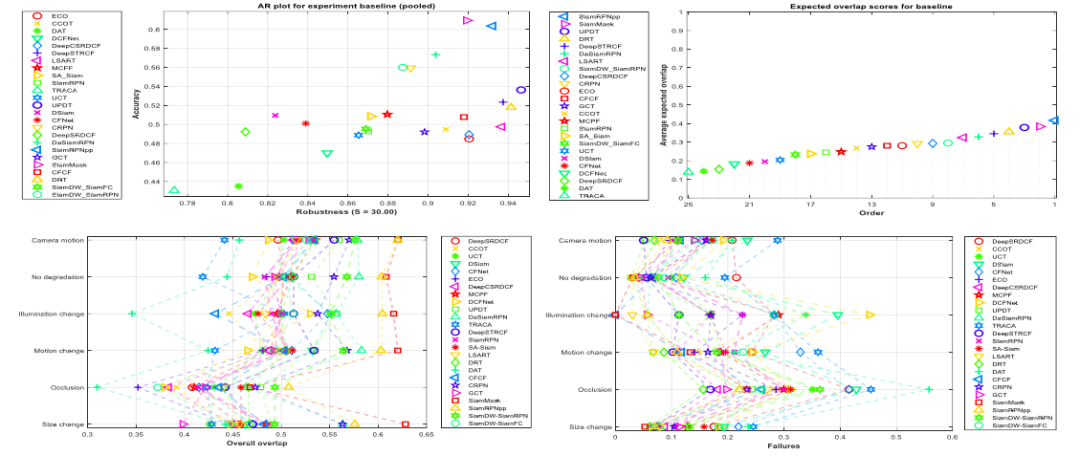

【1】Deep Learning for Visual Tracking: A Comprehensive Survey

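Paper: https://arxiv.org/pdf/1912.00535.pdf

A 23-page survey of deep-learning-based visual tracking (2013 to 2019) with 207 references. It gives a comprehensive review of recent deep trackers evaluated on OTB2013, OTB2015, VOT2018 and LaSOT, introduces 14 commonly used visual tracking datasets, and covers more than 50 state-of-the-art algorithms from recent years (e.g. SiamRPN++).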
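OTB and LaSOT, two of the benchmarks named above, rank single-object trackers with a success plot: for each IoU threshold swept over [0, 1], the fraction of frames whose predicted box overlaps the ground truth by more than that threshold, summarized by the area under the curve (AUC). The following is a minimal sketch of that metric, assuming boxes in (x, y, w, h) format and 21 evenly spaced thresholds; the function names are hypothetical, and the official benchmark toolkits may differ in details such as threshold spacing or how invalid frames are handled.

```python
from typing import List, Sequence, Tuple

# Assumed box format: (x, y, w, h), i.e. top-left corner plus width and height.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    inter_w = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    inter_h = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def success_curve(pred: Sequence[Box], gt: Sequence[Box],
                  num_thresholds: int = 21) -> List[float]:
    """Fraction of frames whose IoU exceeds each threshold in [0, 1]."""
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (num_thresholds - 1) for i in range(num_thresholds)]
    return [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]


def success_auc(pred: Sequence[Box], gt: Sequence[Box],
                num_thresholds: int = 21) -> float:
    """Area under the success curve, approximated as the mean success rate."""
    curve = success_curve(pred, gt, num_thresholds)
    return sum(curve) / len(curve)


if __name__ == "__main__":
    # Two frames: a perfect prediction and a partially overlapping one.
    gt = [(0, 0, 10, 10), (5, 5, 10, 10)]
    pred = [(0, 0, 10, 10), (10, 10, 10, 10)]
    print(round(success_auc(pred, gt), 3))
```

Averaging the sampled success rates is the usual discrete approximation of the AUC; a tracker that is perfect on every frame scores just under 1 here because the strict `>` comparison fails at the threshold 1.0.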

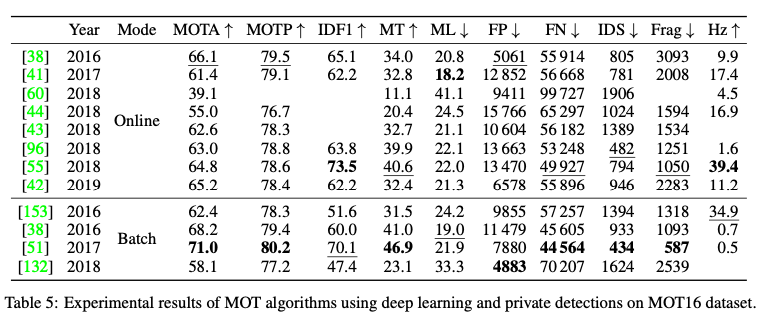

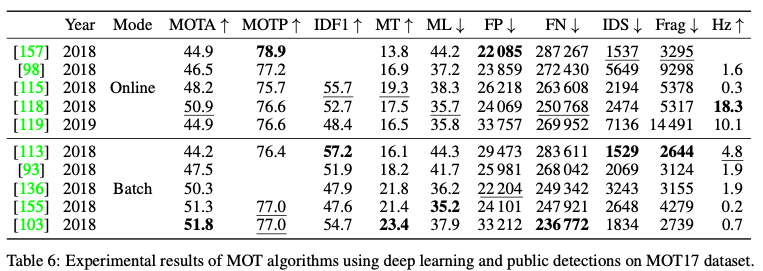

【2】DEEP LEARNING IN VIDEO MULTI-OBJECT TRACKING: A SURVEY

Paper: https://arxiv.org/pdf/1907.12740v4.pdf

Selected benchmarks:

Full list: https://bbs.cvmart.net/articles/1190

【1】LaSOT: "LaSOT: A High-quality Benchmark for Large-scale Single Object Tracking." CVPR (2019)

paper:https://arxiv.org/pdf/1809.07845.pdf

project:https://cis.temple.edu/lasot/

【2】OxUvA long-term dataset+benchmark:"Long-term Tracking in the Wild: a Benchmark." ECCV (2018)

paper:https://arxiv.org/pdf/1803.09502.pdf

project:https://oxuva.github.io/long-term-tracking-benchmark/

【3】TrackingNet: "TrackingNet: A Large-Scale Dataset and Benchmark for Object Tracking in the Wild." ECCV (2018)

paper:https://arxiv.org/pdf/1803.10794.pdf

project:https://silviogiancola.github.io/publication/2018-03-trackingnet/details/

Labs

(Listed in no particular order; suggestions for anything we missed are welcome.)

Shanghai Jiao Tong University: Weiyao Lin

https://www.sohu.com/a/162408443_473283

Dalian University of Technology: Huchuan Lu

http://ice.dlut.edu.cn/lu/

Australian National University: Hongdong Li

http://users.cecs.anu.edu.au/~hongdong/

The Hong Kong Polytechnic University: Lei Zhang

http://www4.comp.polyu.edu.hk/~cslzhang/

Institute of Automation, Chinese Academy of Sciences: Tianzhu Zhang

http://nlpr-web.ia.ac.cn/mmc/homepage/tzzhang/index.html

Shanghai Jiao Tong University: Chao Ma

https://www.chaoma.info/

University of California, Merced: Ming-Hsuan Yang

https://faculty.ucmerced.edu/mhyang/

University of Ljubljana: Matej Kristan

https://www.vicos.si/People/Matejk

University of Oxford: João F. Henriques, Luca Bertinetto

https://www.robots.ox.ac.uk/~joao/

Torr Vision Group

https://www.robots.ox.ac.uk/~tvg/people.php

ETH Zurich: Martin Danelljan

https://users.isy.liu.se/cvl/marda26/

SenseTime tracking group: Wei Wu, Qiang Wang, Zheng Zhu

Tencent AI Lab: Yibing Song https://ybsong00.github.io/

Alibaba: ET Lab
